Sometimes you find a team and a technology that are poised to take off. Snowflake was at that point when we invested in the very early part of 2017. It did not look like the other companies we had invested in up to that time; it was truly a snowflake for us. Its stage and valuation made it a stretch for our core investment fund, which focuses on seed and Series A rounds. (Investments like Snowflake are one reason we raised our Acceleration Fund, to invest in and work with companies that are already scaling quickly.) But our conviction about the team, the opportunity, and the early traction was high, and it remains high on this momentous day.

This week marks another beginning and an exciting milestone for the company: its IPO and debut as a publicly traded company (NYSE: SNOW). The IPO caps a rewarding eight-year journey of building a successful cloud infrastructure service while the public cloud vendors continued to scale and enterprise adoption of the cloud took off. We believe this is just the beginning of what is possible for Snowflake.

In our 25-year history at Madrona, we have been fortunate to invest in and work with many companies that have reached the IPO milestone. Notably, Snowflake is the sixth company in our portfolio to go public in the last four years.

The main reasons we invested in Snowflake in early 2017 came down to team, technology, and market.

- Snowflake’s founding team (Benoit Dageville, Thierry Cruanes, Marcin Zukowski) had built an exceptional cloud-native data warehouse with enormous room to grow as enterprise adoption increased and new features were built. With a seasoned executive, Bob Muglia, as CEO, it was a world-class team.

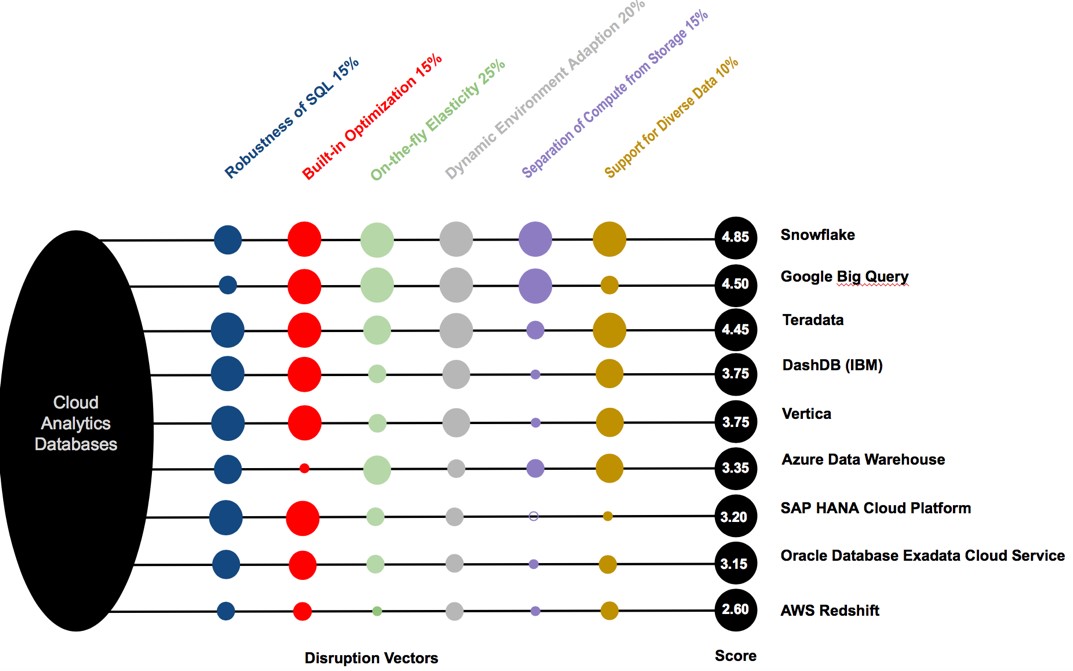

- Snowflake’s product had a truly revolutionary architecture, designed for the cloud from day one, which delivered superior performance, scale, and capabilities.

- We believed in Snowflake’s bet on the secular trend toward cloud-first and cloud-only infrastructure and, more importantly, toward data in the cloud. That seems obvious today, but it is why we saw a massive opportunity.

- Because Snowflake intended to run on multiple public clouds, we knew our deep relationships with Amazon and Microsoft could help Snowflake partner with them to scale its business. Today Snowflake has strong relationships with both companies, the two largest public cloud providers in the world.

Snowflake had also decided at that time to set up an engineering office in the Greater Seattle area (Bellevue), and we worked hard to help them build a team here with outstanding talent.

One thing I remember vividly from our early conversations with Bob was how successfully Snowflake was executing the land-and-expand sales pattern that enterprise software companies aspire to: customers started at around $20K annually and quickly grew to multiples of that amount. This was strong validation of how quickly Snowflake could become critical to the way enterprises use their data. And this was on AWS alone at the time. Today Snowflake runs on all three major public clouds.

Fast forward to 2019, when Frank Slootman, who brought a tremendous track record from Data Domain, EMC, and ServiceNow, came on board as CEO. Over the last year and a half, Frank and his leadership team have continued Snowflake’s transformation from a cloud data warehouse into a cloud data platform.

Over my career in tech, leading large business groups at Microsoft, I worked toward and witnessed growth curves like this one. Drawing on that experience and on being part of the Snowflake journey, here are some lessons for companies embarking on a similar growth journey:

- Build a unique, differentiated, superior product that can help build a strong moat over time.

- Deliver a product experience with a seamless, friction-free, self-serve on-ramp that enables a bottom-up go-to-market model that can scale organically and quickly.

- When you see a massive market opportunity, drive hard into “land grab” and “growth” mode while continuing to pay attention to unit economics. Grow fast and responsibly.

- Build a culture that prioritizes customer focus and customer obsession from day one.

- Hire the best and brightest. Every hiring decision is critical and acts as a force multiplier. Everybody makes hiring mistakes, so focus on minimizing them and on hiring great people who fit the culture.

Earlier this year, Frank Slootman and the Snowflake team unveiled their Data Cloud vision: a comprehensive cloud data platform that lets customers fully mobilize their data in the service of their business. With that as a backdrop, I am eagerly looking forward to what Snowflake will accomplish in the coming years.

Hearty congratulations to everybody on the Snowflake team! Thank you for the opportunity to be part of the Snowflake journey.