2018 was a busy year for Madrona and our portfolio companies. We raised our latest $300 million Fund VII, and we made 45 investments totaling ~$130 million. We also had several successful up-rounds and company exits with a combined increase of over $800 million in fund value and over $600 million in investor realized returns. We don’t see 2019 letting up, despite the somewhat volatile public markets. Over the past year, we have continued to develop our investment themes as the technology and business markets have evolved, and we lay out the key themes here.

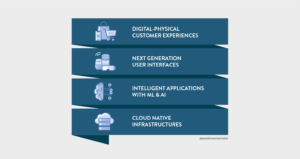

For the past several years, Madrona has primarily been investing against a 3-layer innovation stack that includes cloud-native infrastructure at the bottom, intelligent applications (powered by data and data science) in the middle, and multi-sense user interfaces between humans and content/computing at the top. As 2019 kicks off, we thought it would be helpful to outline our updated, 4-layer model and highlight some key questions we are asking within these categories to facilitate ongoing conversations with entrepreneurs and others in the innovation economy.

For reference, we published our investment themes in previous years, and our thinking since then has both expanded and become more focused as the market has matured and innovation has continued. A quick scan of this prior post illustrates our ongoing focus on cloud infrastructure, intelligent applications, ML, edge computing, and security, as well as how our thinking has evolved.

Opportunities abound within AND across these four layers. Infinitely scalable and flexible cloud infrastructure is essential to train data models and build intelligent applications. Intelligent applications, including natural language processing and image recognition models, power the multi-sense user interfaces, like voice activation and image search, that we increasingly experience on smartphones and home devices (Amazon Echo Show, Google Home). Further, when those services are leveraged to help solve a physical world problem, we end up with compelling end-user services like Booster Fuels in the USA or Luckin Coffee in China.

The new layer that we are spending considerable time on is the intersection between digital and physical experiences (DiPhy for short), particularly as it relates to consumer experiences and health care. For consumers, DiPhy experiences address a consumer need and resolve an end-user problem better than a solely digital or solely physical experience could. Madrona companies like Indochino, Pro.com and Rover.com provide solutions in these areas. In a different way, DiPhy is strongly represented in Seattle at the intersection of machine learning and health care with the incredible research and innovations coming out of the University of Washington Institute for Protein Design, the Allen Institute and the Fred Hutch Cancer Research Center. We are exploring the ways that Madrona can bring our “full stack” expertise to these health care related areas as well.

While we continue to push our curiosity and learning around these themes, they are guides, not guardrails. We are finding some of the most compelling ideas and company founders where these layers intersect. Current company examples include voice and ML applied to the problem of physician documentation in electronic medical records (Saykara), integrating customer data across disparate infrastructure to build intelligent customer profiles and applications (Amperity), and cutting-edge AI able to run efficiently on resource-constrained edge devices (Xnor.ai).

Madrona remains deeply committed to backing the best entrepreneurs in the Pacific Northwest who are tackling the biggest markets in the world with differentiated technology and business models. Frequently, we find these opportunities adjacent to our specific themes, where customer-obsessed founders have a fresh way to solve a pressing problem. This is why we are always excited to meet great founding teams looking to build bold companies.

Here are more thoughts and questions on our 4 core focus areas and where we feel the greatest opportunities currently lie. In subsequent posts, we will drill down in more detail into each thematic area.

Cloud Native Infrastructure

For the past several years, the primary theme we have been investing against in infrastructure is the developer and the enterprise move to the cloud, and specifically the adoption of cloud native technologies. We think about “cloud native” as being composed of several interrelated technologies and business practices: containerization, automation and orchestration, microservices, serverless or event-driven computing, and devops. We feel we are still in the early-middle innings of enterprise adoption of cloud computing broadly, but we are in the very early innings of the adoption of cloud native.

2018 was arguably the “year of Kubernetes” based on enterprise adoption, overall buzz and even the acquisition of Heptio by VMware. We continue to feel cloud native services, such as those represented by the CNCF Trail Map, will produce new companies supporting the enterprise shift to cloud native. Other areas of interest (that we will detail in a subsequent post) include technologies/services to support hybrid enterprise environments, infrastructure backend as code, serverless adoption enablers, SRE tools for devops, open source models for the enterprise, autonomous cloud systems, specialized infrastructure for machine learning, and security. Questions we are asking here include how the relationship between the open source community and the large cloud service providers will evolve going forward and how a broad-based embrace of “hybrid computing” will impact enterprise customer product/service needs, sales channels and post-sales services.

For a deeper dive click here.

Intelligent Applications with ML & AI

The utilization of data and machine learning in production has probably been the single biggest theme we have invested against over the past five years. We have moved from “big data” to machine learning platform technologies such as Turi, Algorithmia and Lattice Data to intelligent applications such as Amperity, Suplari and AnswerIQ. In the years ahead, “every application is intelligent” will likely be the single biggest investment theme, as machine learning continues to be applied to new and existing data sets, business processes, and vertical markets. We also expect to find interesting opportunities in services that enable edge devices to operate with intelligence, industry-specific applications in fields such as life sciences where large amounts of data are being created, services to make ML more accessible to the average customer, and emerging machine learning methodologies such as transfer learning and explainable AI. Key questions here include (a) how data rights and strategies will evolve as the power of data models becomes more apparent and (b) how to automate intelligent applications to be fully managed, closed loop systems that continually improve their recommendations and inferences.

For a deeper dive click here.

Next Generation User Interfaces

Just as the mouse and touch screen ushered in new applications for computing and mobility, new modes of computer interaction like voice and gestures are catalyzing compelling new applications for consumers and businesses. The advent of the Amazon Echo and Echo Show, Google Home, and a more intelligent Siri service has dramatically changed how we interact with technology in our personal lives. Although currently limited to short, simple actions, voice is becoming a common approach for classic use cases like search, music discovery, food/ride ordering and other activities. Madrona’s investment in Pulse Labs gives us unique visibility into next generation voice applications in areas like home control, ecommerce and ‘smart kitchen’ services. We are also enthused about new mobile voice/AR business applications for field service technicians, assisted retail shopping (e.g., IKEA’s ARKit furniture app) and many others, including medical imaging/training.

Vision and image recognition are also rapidly becoming ways for people and machines to interact with one another, as facial recognition security on iPhones and intelligent image recognition systems highlight. Augmented and virtual reality are growing much more slowly than initially expected, but mobile phone-enabled AR will become an increasingly important tool for immersive experiences, particularly in visually focused vocations such as architecture, marketing, and real estate. “Mobile-first” has become table stakes for new applications, but we expect to see more “do less, but much better” opportunities in both consumer and enterprise with elegantly designed UIs. Questions central to this theme include (a) which ‘high-value’ new experiences are truly best, or only possible, when voice, gesture and the overlay of AR/VR/MR are leveraged, (b) what the limits of image recognition (especially facial recognition) will be in certain application areas, (c) how effective image-driven systems like digital pathology can be at augmenting human expertise, and (d) how multi-sense point solutions in the home, car and store will evolve into platforms.

For a deeper dive click here.

DiPhy (digital-physical converged customer experiences)

The first twenty years of the internet age were principally focused on moving experiences from the physical world to the digital world. Amazon enabled us to find, discover and buy just about anything from our laptops or mobile devices in the comfort of our home. The next twenty years will be principally focused on leveraging the technologies the internet age has produced to improve our experiences in the physical world. Just as the shift from physical to digital has massively impacted our daily lives (mostly for the better), the application of technology to improve the physical will have a similar if not greater impact.

We have seen examples of this trend through consumer applications like Uber and Lyft as well as digital marketplaces that connect dog owners to people who will take care of their dogs (Rover). Mobile devices (principally smartphones today) are the connection point between these two worlds, and as voice and vision capabilities become more powerful, so will the apps that reduce friction in our lives. As we look at other DiPhy sectors and opportunities, one where the landscape will change drastically over the coming decades is physical retail. Specifically, we are excited about digital native retailers and brands adding compelling physical experiences, increasing digitization of legacy retail space, and improving supply chain and logistics down to where the consumer receives their goods/services. Important questions here include (a) how traditional retailers and consumer services will evolve to embrace these opportunities and (b) how the deployment of edge AI will reduce friction and accelerate the adoption of new experiences.

For a deeper dive click here.

We look forward to hearing from many of you who are working on companies in these areas and, most importantly, to continuing the conversation with all of you in the community and pushing each other’s thinking around these trends. To that end, over the coming weeks we will post a series of additional blogs that go into more depth in each of our four thematic areas.

Matt, Tim, Soma, Len, Scott, Hope, Paul, Tom, Sudip, Maria, Dan, Chris and Elisa

(to get in touch just go to the team page – our contact info is in our profiles)