Madrona hosted its second annual Intelligent Applications Summit on Oct. 11, bringing together roughly 250 founders, builders, investors, and thought leaders across the AI community. So much has changed in the space since our inaugural IA Summit twelve months ago, which was held just six weeks before the release of ChatGPT!

Last year, the key question at the Summit centered around how long it would take users to adopt intelligent applications and embrace AI. This year, that wasn’t even a question. AI is here, and every company is racing to figure out how best to use it.

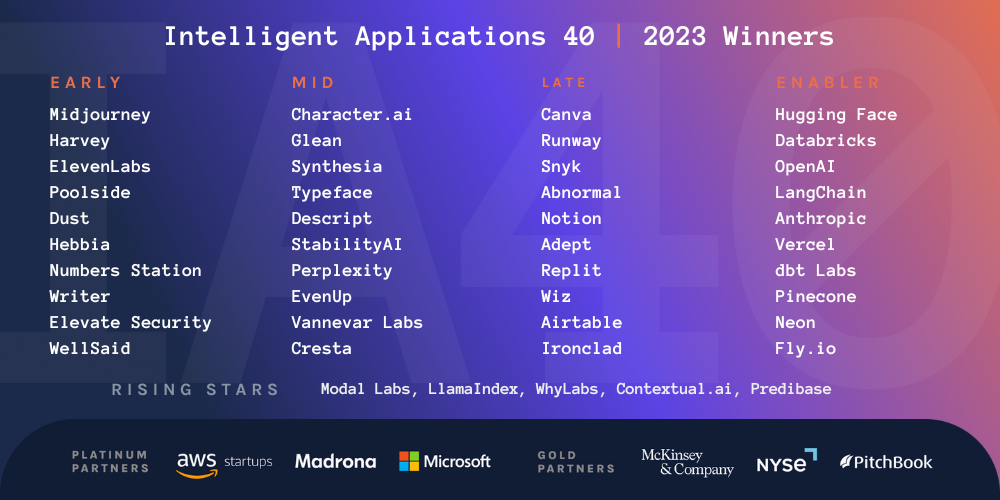

We were lucky to hear perspectives from a range of practitioners (and IA40 winners), including builders in AI infrastructure (e.g., LangChain, Unstructured, Pinecone, Neon), large public companies (e.g., Microsoft, Amazon, Salesforce, Goldman Sachs), research institutions (e.g., AI2, McKinsey), cutting-edge applications (e.g., Typeface, Glean, Insitro), and many others.

Here are five key takeaways from the IA Summit:

AI Has Gone Mainstream

To slightly rephrase Dinah Washington, what a difference a year makes!

In the twelve months since our first IA Summit in October 2022:

- ChatGPT was released. In just a few months, it became the fastest-growing consumer app of all time, helping its creator, OpenAI, generate $1B+ in annualized revenue.

- LangChain went from an idea to one of the fastest-growing open-source projects in history, earning 65K+ stars on GitHub and spawning a commercial business with millions in funding.

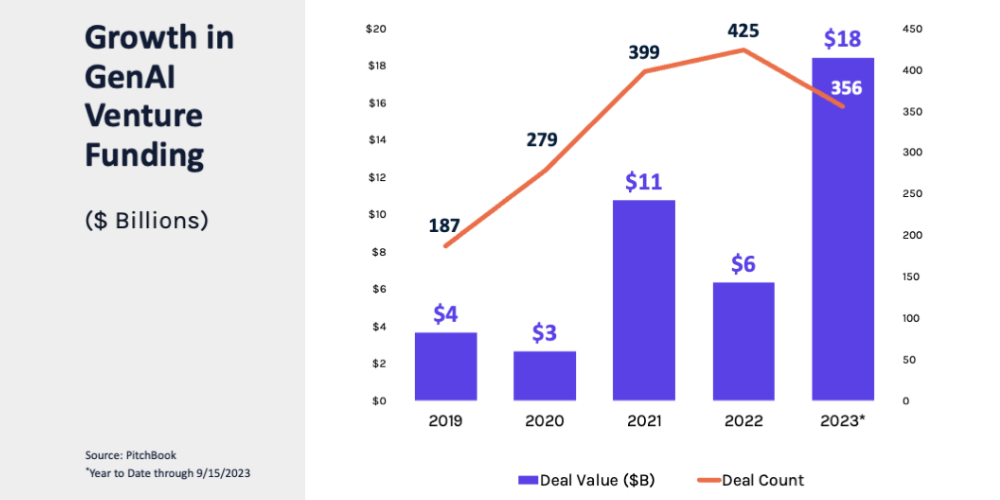

- Venture funding in generative AI tripled from $6B in 2022 to $18B in 2023 (as of Sept. 15, 2023).

- Microsoft, Google, Salesforce, Amazon, and even Apple have all jumped into the fray, either through their own products or aggressively funding and partnering with emerging startups and foundation models.

Needless to say, the tone of this year’s IA Summit was markedly different from a year ago. Nobody was questioning whether consumers would “accept” AI; the question was how companies and users could best capture its immense value.

RAG is Hot

One topic that was on everyone’s lips was retrieval-augmented generation, or RAG, a framework for improving the quality of text generated by a large language model (LLM) by grounding the model in external sources of knowledge. Rather than training the model on data specific to an enterprise or user, RAG retrieves the relevant data at query time and passes it to the model alongside the prompt, so the model can “look up” external information to improve its responses. This makes RAG different from fine-tuning, the process of taking a pre-trained LLM and further training it on a smaller, specific dataset to adapt it for a particular task or to improve its performance.
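For readers who want to see the shape of the idea, here is a minimal sketch of the retrieve-then-prompt step. It is an illustration under loud assumptions, not how any Summit speaker's product works: the documents, function names, and prompt wording are all hypothetical, and simple word-overlap similarity stands in for the embedding search a real system would typically use. The sketch stops at building the prompt and does not call a model.

```python
import math
import re
from collections import Counter

# Hypothetical in-memory knowledge base; real systems usually query a vector store.
DOCUMENTS = [
    "Our refund policy allows returns within 30 days of purchase.",
    "Support hours are 9am to 5pm Pacific, Monday through Friday.",
    "Enterprise plans include single sign-on and audit logs.",
]


def vectorize(text):
    """Turn text into a bag-of-words count vector (a stand-in for an embedding)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(count * b[token] for token, count in a.items() if token in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, documents, k=1):
    """Return the k documents most similar to the query."""
    query_vec = vectorize(query)
    ranked = sorted(documents, key=lambda d: cosine(query_vec, vectorize(d)), reverse=True)
    return ranked[:k]


def build_prompt(query, documents, k=1):
    """Place the retrieved text in the prompt; the model itself is never retrained."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )


if __name__ == "__main__":
    print(build_prompt("What is the refund policy?", DOCUMENTS))
```

The point of the sketch is the division of labor: the knowledge lives outside the model and can be updated by editing the documents, whereas fine-tuning would bake it into the model's weights.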

RAG is certainly having its moment. In a panel on emerging architectures for building generative apps, Harrison at LangChain, Brian at Unstructured, and Luis at OctoML discussed the pros and cons of RAG and its rising popularity among AI developers, particularly because it is less expensive than fine-tuning and more generalizable. Another discussion topic was the ability to train an LLM, and then use another LLM for re-training based on specific use cases.

It is clear that there is no definitive standard yet. Companies of all sizes are stitching together several different techniques to optimize model performance.

Everyone Will Have Their Own App

Nvidia CEO Jensen Huang said in an interview in March 2023 that there are five million applications in app stores, probably several hundred PC applications, and maybe 500,000 websites that matter.

“How many applications are there going to be on large language models? I think it will be hundreds of millions, and the reason for that is because we’re going to write our own! Everybody’s going to write their own,” he said.

We heard similar sentiments throughout the IA Summit. We have gone from a world with hundreds of apps during the PC and Internet days, to a world with millions of apps in the mobile era. It is not a massive stretch to believe that we are now entering a world where apps built on LLMs can be customized to the needs and wants of individual users. Imagine an app that suggests recipes tailored to you, using a model trained on your restaurant history, dietary restrictions, and taste preferences…and then multiply that for every picky foodie out there.

Nikita Shamgunov, CEO of Neon, reiterated this point when he explained that part of Neon’s mission is to expand the number of developers from ~25M today to 50M+ by 2030, a necessary force for a world with “hundreds of millions” of apps!

Jason Warner, CEO of Poolside, also shared a vision where we will be able to easily have millions of code-generated applications.

Open Models are Gaining Steam

Ali Farhadi, formerly the founder of Xnor.ai (acquired by Apple in 2020) and currently the CEO of the Allen Institute for AI (AI2), led a keynote on “The State of Open-Source Models & the Path to Foundation Models at the Edge,” where he passionately made the case for open models. Open-source AI models allow anyone to view and manipulate code and are growing in popularity as startups and giants alike race to compete with private model players like OpenAI (confusing names…we know). With powerful open-source models like Meta’s Llama-2 and Mosaic’s MPT-7B, it is becoming easier and easier for developers to get up and running quickly and create new applications.

However, this is not to say that there isn’t a need for closed-source models. The most popular AI tool remains ChatGPT, and we will continue to see a proliferation of closed models from Anthropic, Google, and others, particularly due to their ability to move quickly and spend enormous resources to better train their models. There will continue to be a balancing act between a closed stack driving value capture, and an open stack unlocking broad innovation.

Early Stage Companies Are as Dynamic As Ever

One of the most interesting insights during this year’s IA Summit came from comparing 2023’s list of IA40 winners (the top 40 private companies building AI apps as voted by a group of VCs) with the prior two lists. Twenty-nine of the 40 companies this year are first-time winners, and only seven are three-peat winners (Cresta, Runway, Snyk, Abnormal, HuggingFace, Databricks, and dbt). In fact, the highest vote-getter in 2022 (Jasper) didn’t even appear on this year’s list.

This just goes to show how incredibly dynamic the space is. There are new companies being formed at every level of the stack, from the foundation model level to enabler to application, and the speed at which they can gain thousands of users and millions of dollars is unprecedented (Midjourney being a great example).

We are also watching to see how the generative-native vs. generative-enhanced debate continues to play out. Generative-enhanced companies have the distribution, data, and network effects, but can the generative-native companies re-imagine a workflow and gain new customers to adopt the technology? Assuming the generative-enhanced companies win in the short term, the generative-native companies will be even more dynamic, further highlighting how many of these early-stage companies may no longer be around in a number of years.

We fully expect a new crop of winners in the 2024 list, and we are excited to watch the incredible developments in the market!

Watch all the session recordings here.