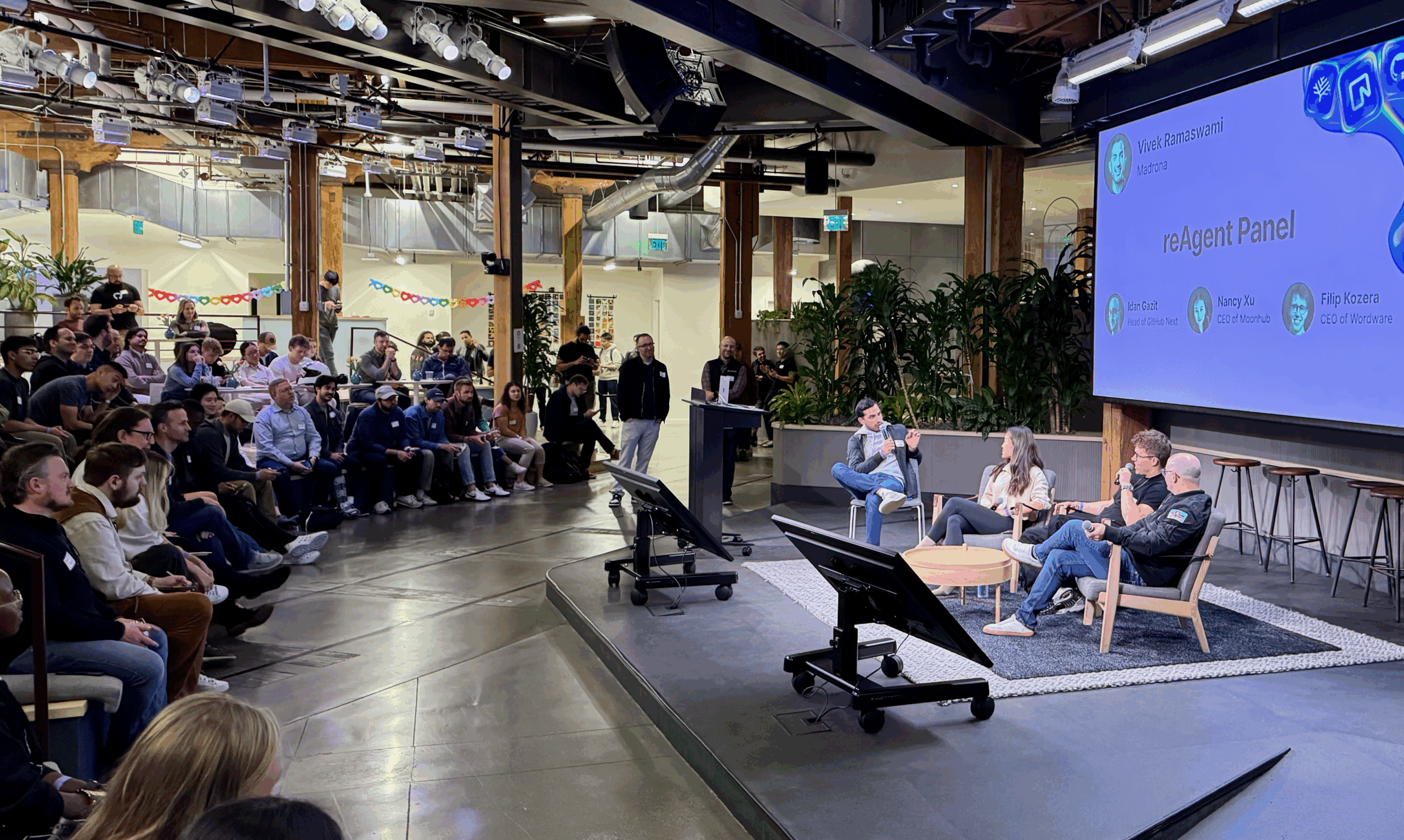

A few weeks ago, we hosted reAgent, an invite-only gathering of 150+ AI agent builders at GitHub HQ. The speaker lineup included Nikita Shamgunov (CEO of Neon, since acquired by Databricks), Nick Turley (Head of Product, ChatGPT), Idan Gazit (Head of GitHub Next), Nancy Xu (CEO of Moonhub, since acquired by Salesforce), Filip Kozera (CEO of Wordware), Matan Grinberg (CEO of Factory), Eric Newcomer (CEO of Newcomer), and my Madrona colleague Vivek Ramaswami.

The conversations were rich, the technical discussions were deep, and with a few weeks of reflection, three themes emerged that stretch beyond surface-level excitement.

- First, we need to graduate from “some” reasoning to a more useful caliber of reasoning in order to address “reasoning-starved” domains.

- Second, we’re all becoming “agent managers.” Our roles at work, and even in our daily lives, will require new skills (planning, delegation, auditing, even hiring/firing) that today feel like executive skills.

- Third, all of this is putting stress on today’s infrastructure stack, and we need new infrastructure to support it.

But before we go back a few weeks to reAgent, let’s go back a few years further to my high school physics class (I promise this connects!).

The Physics Teacher’s Dilemma

My high school physics teacher, Mr. Dougherty, had a grading system that I think perfectly captures where we are with AI agents today. On exam problems requiring complex reasoning, he awarded points like this:

- 1 point: Any kind of reasoning

- 2 points: The right reasoning

- 3 points: The right reasoning with the right answer

This was brilliant teaching. It taught us to think fearlessly even when we couldn’t see the full path ahead. It rewarded the process of grappling with hard problems, not just getting lucky with the final answer. That grading curve was a major contributor to how I approach complex problems today.

But here’s the thing: I believe our collective view of agentic reasoning is currently stuck at the “1 point” standard. We celebrate any display of agent reasoning: viral videos of code generation, impressive but inconsistent task completion, and clever but unreliable problem-solving attempts.

And that’s fine for a technology in its infancy, just as Mr. Dougherty’s generous curve was perfect for high school students learning to tackle physics problems for the first time.

But if agents are going to tackle the world’s toughest challenges, the problems that really matter, we need to get them to a much higher standard. We need agents that consistently demonstrate the right reasoning and arrive at the right answers. Because in domains like healthcare, finance, law, and engineering, “any kind of reasoning” isn’t just insufficient. It can be dangerous.

Theme 1: Intelligence-Constrained Domains Are Where the Real Opportunity Lives

This connects directly to one of the most important insights from our event. Nick Turley from OpenAI made a crucial distinction that I think deserves much more attention. He talked about focusing on domains that are “useful and intelligence constrained” – areas where the limiting factor isn’t technology or resources, but pure reasoning capability.

As Nick put it: “It’s easy to find examples of hard problems that are academically hard, but they’re not that useful solved. Science is a great example of something that is very useful and intelligence constrained.”

Think about what this means. We’re not talking about automating simple tasks or replacing basic workflows. We’re talking about augmenting human intelligence in areas where more reasoning power directly translates to better outcomes for society.

Healthcare: Personalized treatment protocols, complex diagnostic reasoning, drug interaction analysis. These aren’t just intellectually challenging; they’re literally life-and-death decisions where better reasoning saves lives.

Finance: Risk modeling, market analysis, fraud detection. More intelligent reasoning here doesn’t just improve returns; it can help prevent financial crises and protect people’s savings.

Law: Case analysis, contract review, regulatory compliance. Better legal reasoning can make justice more accessible and consistent.

Engineering: System design, failure analysis, optimization problems. More sophisticated reasoning can make our infrastructure safer, more efficient, and more sustainable.

Public Policy: Evidence synthesis, scenario modeling, stakeholder analysis. Better reasoning in governance could help us tackle climate change, inequality, and other systemic challenges.

The pattern is clear: these are domains where human experts are already stretched thin, where the problems are getting more complex, and where the cost of poor reasoning is enormous. They’re also domains where even modest improvements in reasoning capability can create massive value.

But here’s what makes Nick’s insight so important: these domains require agents that operate at the “3 point” level of Mr. Dougherty’s scale. They need the right reasoning and the right answers, consistently and reliably. “Any kind of reasoning” simply won’t cut it.

This is why the current focus on flashy demos and viral use cases, while exciting, may be missing the bigger picture. The real transformation happens when we can deploy agents in these intelligence-constrained domains with confidence. When a doctor can trust an AI agent to help with complex treatment decisions. When a financial analyst can rely on an agent for sophisticated risk modeling. When an engineer can delegate critical system design work to an AI colleague.

Theme 2: We’re All Becoming Agent Managers

Which brings me to another key insight from our event: we’re all about to become “agent managers,” as Nancy Xu from Moonhub/Salesforce pointed out. As the capabilities of AI agents improve and we start deploying them in these high-stakes, intelligence-constrained domains, our role as humans fundamentally changes.

We won’t be writing code line by line. We’ll be defining objectives and reviewing agent-generated solutions. We won’t be conducting research step by step. We’ll be setting research directions and synthesizing agent-generated insights. We won’t be analyzing data point by point. We’ll be framing questions and interpreting agent-generated analysis.

This shift requires a completely different skill set. As Nancy observed during our panels, “Most people are just not very good at telling an agent what they want… most people who are using agents have never been managers before, so they’re just not very good at delegating.”

The humans who will be most successful in this agentic future are those who excel at:

- Objective setting: Clearly defining what success looks like

- Context provision: Giving agents the right background and constraints

- Quality assessment: Quickly evaluating whether agent output meets standards

- Iterative refinement: Guiding agents toward better solutions

- Risk management: Knowing when to intervene or redirect

In essence, the future belongs to those who are best at collaborating with people and agents alike.

Theme 3: From Toys to Tools Requires New Infrastructure

One theme that emerged repeatedly throughout our discussions was the gap between impressive prototypes and production-ready systems. As Idan Gazit from GitHub Next put it: “It’s really easy to make toys. And it’s really hard — all the bodies are buried in the process of taking toy to tool.”

This is particularly acute in those intelligence-constrained domains we discussed. A coding agent that works 70% of the time might be useful for prototyping. But a healthcare agent that’s wrong 30% of the time could be lethal.

The infrastructure to support reliable, trustworthy agents in high-stakes domains is still being built. We need better tools for:

- Verification and validation of agent reasoning

- Audit trails for agent decision-making

- Rollback mechanisms when agents make mistakes

- Human oversight integration that doesn’t slow everything down

- Quality assurance systems for agent outputs

This infrastructure buildout represents a massive opportunity for founders. The companies that solve the reliability problem (that help agents graduate from Mr. Dougherty’s “1 point” to “3 points”) will enable the transformation of those intelligence-constrained domains.

The Future Isn’t Happening Fast Enough

We have urgent problems that require our best thinking: climate change, healthcare costs, financial inequality, and infrastructure decay.

The promise of AI agents in intelligence-constrained domains is that we might finally be able to accelerate progress on these challenges and make the future happen faster. Not by replacing human judgment, but by augmenting human reasoning at scale. By giving every doctor access to the reasoning power of the world’s best diagnosticians. By giving every financial analyst the modeling capabilities of the top quantitative researchers. By giving every engineer the optimization insights of the most experienced designers.

But only if we can get agents from “any kind of reasoning” to “right reasoning with right answers.”

What This Means for Builders

If you’re building in the agent space, the intelligence-constrained domains represent both the highest impact and the highest bar for success. These are markets where customers will pay premium prices for tools that demonstrably improve outcomes, but they require a level of reliability and trustworthiness that most current agents can’t provide.

The opportunity is enormous precisely because the standards are so high. If you can build agents that doctors trust, that financial analysts rely on, that engineers delegate to – you’re not just building a product, you’re expanding the reasoning capacity of entire professions.

At Madrona, we’re actively looking for founders who are tackling these challenges. Not just building better demos, but building the infrastructure, tools, and applications that will enable the transition from toy agents to trusted AI colleagues.

The future of reasoning-augmented humans isn’t just about making individuals more productive. It’s about unlocking our collective intelligence to solve problems that matter. And that future starts with getting our agents to a “3 point” standard.

If you’re building toward that future, we’d love to hear from you at [email protected]. You can also reach out to me if you’d like an invitation to the next reAgent event.

The reAgent event was made possible by our incredible speakers and the GitHub team that hosted us. Special thanks to everyone who shared insights that night – some of the best conversations happened after the panels ended.