I’m thrilled to share Madrona’s investment in Axiom, a company building advanced reasoning models trained on mathematical proofs. The world’s most pressing challenges, from drug discovery to energy independence, aren’t bottlenecked by data or computing power. They’re bottlenecked by reasoning capability itself.

Current AI reasoning is remarkable but constrained. It’s designed to sound human, not think rigorously. In domains where correctness matters most, like finance, engineering, and scientific discovery, that gap becomes critical. Meanwhile, the sophisticated reasoning these fields demand is itself bottlenecked by human bandwidth. We simply don’t have enough people capable of the rigorous thinking required to tackle these intelligence-constrained problems at scale.

This is a key accelerant to what we at Madrona have called the “Reasoning Revolution.” It represents the shift from AI systems that simply perform tasks to reasoning machines that collaborate with humans to plan, decide, and act. Axiom is building the advanced reasoning capabilities backed by mathematical rigor that reasoning machines require.

It takes an outlier founder like Carina Hong, who has pushed herself to the frontiers of mathematics since childhood, to tackle this mission with the ambition and aptitude required to seize it.

Mathematical Truth as Training Foundation

Axiom’s insight is profound. To build models capable of rigorous reasoning, you need to train them on rigorous, verifiable reasoning, not examples optimized for human preference. Mathematical proofs expressed in specialized languages like LEAN provide exactly this foundation. Models trained on verified proofs perform remarkably well not just in pure mathematics, but across domains requiring rigorous logical thinking.

Unlike typical training data that includes reasoning of varying quality, mathematical proofs provide an unambiguous foundation. This represents a fundamentally different approach. It prioritizes rigorous mathematical thinking over human preference. The major labs have shown this approach’s promise, but Carina and Axiom recognize that this shift is so tectonic it requires ground-up thinking, not incremental improvements to existing architectures.

The opportunity this unlocks is enormous. We live in a world of intelligence scarcity in critical domains, and early market conversations confirm strong appetite for these capabilities.

Scientific Discovery: The bottleneck isn’t equipment or funding, but the reasoning capability needed to formulate novel hypotheses and synthesize insights across multiple disciplines. Major breakthroughs increasingly require connecting knowledge from physics, chemistry, biology, and mathematics in ways that exceed individual human expertise.

Quantitative Trading: Elite quantitative funds face a fundamental constraint in alpha discovery. They cannot find enough people capable of the advanced mathematical reasoning required to develop and validate sophisticated trading strategies. The limiting factor isn’t capital, data, or computing power, but human reasoning capacity itself.

Software Verification: Critical systems failures cost billions because we can’t find enough experts to formally verify complex code behavior. This challenge intensifies as AI writes more code and domain experts “vibe code” their ideas into software, making rigorous verification more important than ever.

Legal Reasoning: The complexity of modern contracts and regulations outpaces human capacity to analyze logical dependencies and contradictions. Legal teams need tools that can provide mathematical certainty in contract analysis and identify hidden conflicts across thousands of pages.

These are “intelligence-constrained” domains. The limiting factor isn’t technology or resources, but reasoning capability itself. Even modest improvements in reasoning power create massive value. As AI increasingly generates code, contracts, and analysis that humans don’t fully understand, the need for advanced reasoning becomes critical. Axiom’s models could provide the crucial layer of rigorous reasoning and mathematical certainty across industries where correctness matters.

The Limits of Human Preference Training

These models are remarkable achievements, but they face a specific challenge in high-stakes domains. They’re optimized to align with human preferences, which studies show often favor responses with praise and engagement over pure rigor. Reinforcement Learning on Human Feedback (RLHF) naturally rewards models for producing outputs that “feel right” to human reviewers.

This creates a subtle but important gap for domains requiring the highest levels of rigor. Training data includes chain-of-thought examples of varying quality, from rigorous to informal. The result is models that reason exceptionally well across most contexts but face constraints in fields where even small errors have major consequences.

The major labs have achieved remarkable breakthroughs in mathematical reasoning as a gateway to broader reasoning. The foundational work began with François Charton (now a founding member of technical staff of Axiom) and Guillaume Lample’s Deep Learning for Symbolic Mathematics in 2019, which for the first time applied transformers to mathematical reasoning before the LLM era even began. OpenAI built on this with their 2022 work on formal mathematics, demonstrating that language models could prove Olympiad-level theorems.

The era-defining moment came in 2024 with Google DeepMind’s AlphaProof, which achieved silver medal performance at the International Mathematical Olympiad by combining language models with formal verification. This year, both OpenAI and Google DeepMind achieved gold medal performance. These demonstrations occurred years earlier than experts predicted.

But here’s the key insight. If we’re celebrating success on problems designed for talented high schoolers, imagine what becomes possible when AI can reason through the ambiguous, multi-layered challenges that define expert-level work. A new generation of founders has spotted the opportunity to go radically further by starting from mathematical first principles.

From Formal Methods to AI Reasoning

During my time at AWS, we used formal methods extensively to verify complex systems for security and billing. Amazon invested heavily in this area, hiring hundreds of experts to guarantee correctness in everything from security policies to billing systems.

In my investing role, I hadn’t seen much application of formal methods by new companies. It required substantial resources and deep expertise. But in the past 24 months, this historically quiet area of computer science has awakened. The maturity of verification technologies like LEAN, combined with advances in GenAI, has created a new opening. Advanced AI reasoning is by no means cheap or easy, but we can now bet ambitiously on applying formal methods for dramatically better reasoning at scale. The opportunity is to marshal decisive resources, both capital and rare talent, toward companies building on this foundation.

These discussions inevitably led me to Axiom.

Why This Team, Why Now

Carina Hong is a 24-year-old polymath who is curious about what’s possible and utterly uninterested in what’s typical. Her Morgan Prize recognition noted the 9 research papers she wrote in her three years at MIT, spanning number theory, combinatorics, and probability. She has consistently pushed herself to the frontiers of mathematical reasoning and even legal reasoning. What strikes me most after months of working with her is her tenacity and grace to pursue durable problems with ambitious technology.

Carina has recruited a world-class founding team to match that ambition. CTO Shubho Sengupta built and led Meta FAIR’s Garage team that created OpenGo, FastMRI, and CrypTen, and before that worked under Andrew Ng at Baidu. Shubho’s distributed training work is the foundation of how neural networks are trained today. François Charton pioneered applying transformers to hard mathematical problems in 2019, before ChatGPT even existed, and in 2024 solved a hundred-year-old open problem while disproving a 30-year-old conjecture. Hugh Leather pioneered deep learning for code generation in 2016 and has since built the first LLMs for compilers and GPU code generation, including recent flagship work on computational world models that Yann LeCun has highlighted.

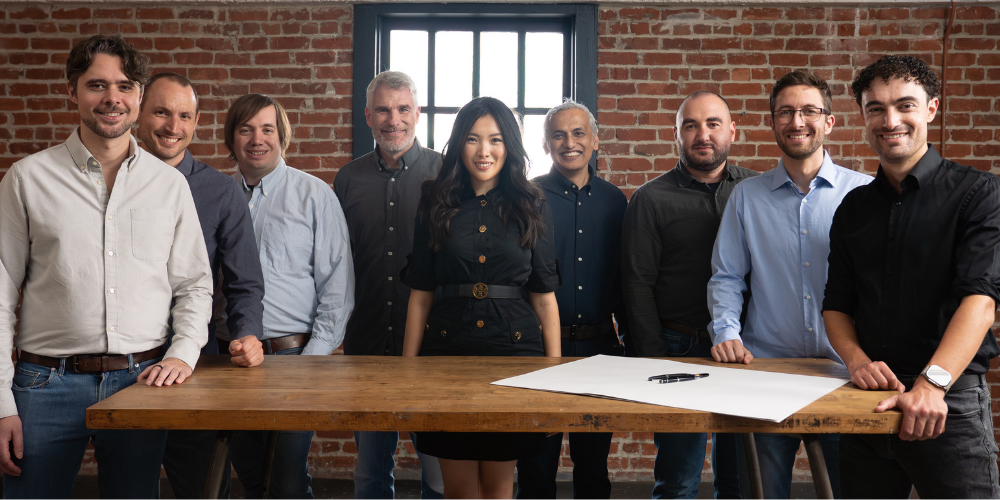

In just a few months, Axiom has assembled an extraordinarily talent-dense team across the three pillars required to build what they envision. Those pillars are AI, mathematics, and programming languages. This group has collectively done decades of foundational work to bring their respective domains to this pivotal moment. The fact that Axiom built such a team in today’s competitive AI hiring climate demonstrates not only the compelling nature of their vision, but this is a founding team capable of addressing the world’s reasoning bottleneck.

Our Bet on Axiom

Axiom represents something rare. It offers a clear technical path to significantly advance reasoning capabilities, led by a founder and team uniquely positioned to execute that vision. By training on mathematical proofs rather than approximating human preference, they’re building models capable of rigorous conjecture, deduction, and verification at the highest standard.

We’re thrilled to support Carina and the Axiom team as they push the frontier of AI reasoning. We’re also pleased to co-invest in Axiom together with B Capital, Greycroft, and Menlo Ventures. Axiom’s work won’t just make models smarter. It will make them capable of the rigorous thinking needed to tackle intelligence-constrained problems that matter most.

Learn more about Axiom’s approach to advanced AI reasoning at https://axiommath.ai. If you’re building in formal methods or AI reasoning, we’d love to hear from you at [email protected]. And if you’re interested in joining Carina and the team to work on these problems, reach out to [email protected].