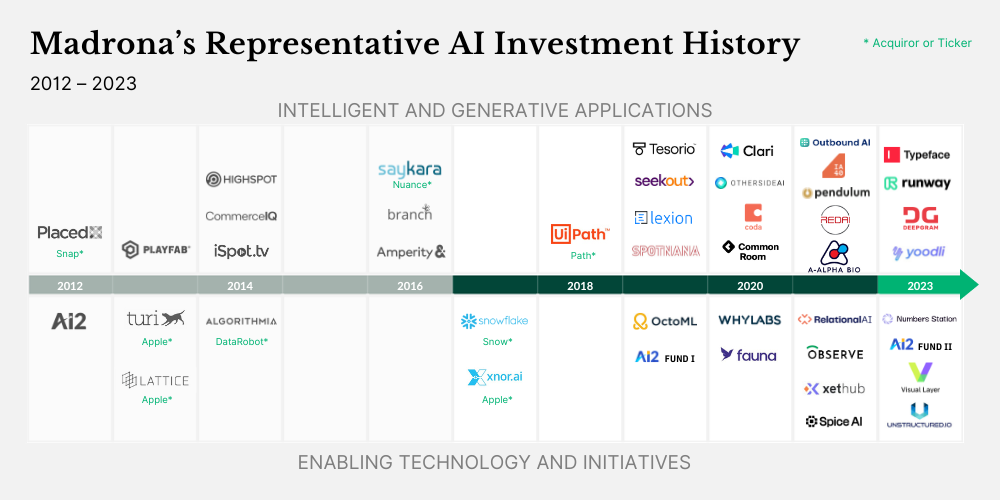

There is no doubt that generative AI is consuming the minds of entrepreneurs, venture capitalists, and many incumbent technology players. The excitement for this massive wave of disruption seems to be building exponentially in the Seattle area (home of Microsoft and Amazon), San Francisco, and beyond. As investors in the intelligent and generative application sector for a decade, Madrona continues to advance our perspective on the rapidly changing generative app ecosystem and the game theory around which types of companies are more likely to win at various layers of the generative AI stack.

Heads or Tails

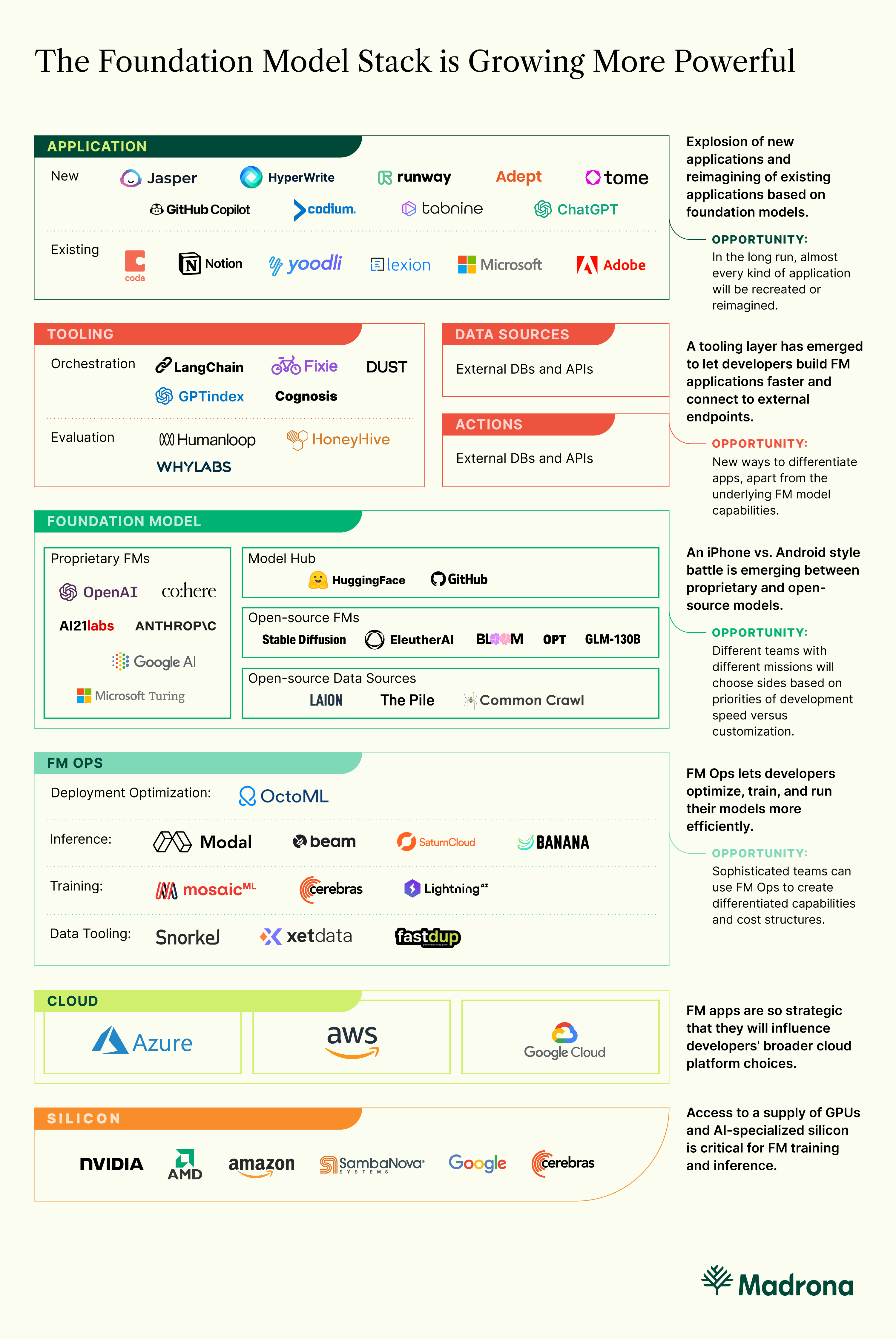

Let’s start at the bottom of the stack. Foundation models like GPT-4 and Stable Diffusion are increasing their profiles in the developer world. A considerable debate has emerged around whether there will be a few models to “rule them all” or many models. We believe that many models will prevail, but there will be BOTH head models and tail (as in long-tail) models that deliver value. Larger (head) models, with many billions of parameters and tokens, will be expensive to build and potentially expensive to use, and they tend to be on the leading edge of the emergent properties made possible by their massive size and scale. These large models will become more sophisticated (multi-modal, less “confidently wrong”) and will often be necessary to help build more targeted generative apps. But they will often NOT be sufficient.

Model “cocktails” that combine head models, tail models, and even knowledge resources are more likely to emerge. Tail models will access deep, domain-specific data and focus on specific problems that need higher accuracy and context. For example, DeepMind, the Institute for Protein Design, and Meta have built longer-tail protein structure models to leverage generative capabilities around protein structure and function design. Likewise, GitHub Copilot was built by leveraging both the GPT capabilities of OpenAI and a specialty code writing model trained on GitHub data. A similar approach was taken with the recently announced Microsoft 365 Copilot.
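To make the “cocktail” idea concrete, here is a minimal sketch in Python. Everything in it is hypothetical: the `ModelCocktail` class, the keyword-based routing rule, and the stub models stand in for real head models, tail models, and knowledge resources, and none of it reflects how any named product is actually built.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional

# Assumption: a "model" is simply a function from prompt text to output text.
Model = Callable[[str], str]


@dataclass
class ModelCocktail:
    """Hypothetical router that combines a head model, tail models, and facts."""

    head: Model                                               # general-purpose fallback
    tails: Dict[str, Model] = field(default_factory=dict)     # domain keyword -> specialist
    knowledge: Dict[str, str] = field(default_factory=dict)   # keyword -> stored fact

    def pick_domain(self, query: str) -> Optional[str]:
        """Return the first registered domain keyword found in the query."""
        lowered = query.lower()
        for domain in self.tails:
            if domain in lowered:
                return domain
        return None

    def lookup(self, query: str) -> List[str]:
        """Collect stored facts whose keyword appears in the query."""
        lowered = query.lower()
        return [fact for key, fact in self.knowledge.items() if key in lowered]

    def answer(self, query: str) -> str:
        """Add any matching facts to the prompt, then route to a tail or the head."""
        facts = self.lookup(query)
        prompt = query if not facts else query + "\nContext: " + " ".join(facts)
        domain = self.pick_domain(query)
        model = self.tails[domain] if domain is not None else self.head
        return model(prompt)


# Stub models for illustration only; real ones would call a hosted or local model.
def head_model(prompt: str) -> str:
    return "[head] " + prompt


def protein_model(prompt: str) -> str:
    return "[protein-tail] " + prompt


cocktail = ModelCocktail(
    head=head_model,
    tails={"protein": protein_model},
    knowledge={"kinase": "Kinases transfer phosphate groups."},
)
```

Calling `cocktail.answer("Write a haiku")` falls through to the head model, while a query that mentions "protein" is routed to the specialist and picks up any matching context. A production router would likely use a classifier or embeddings rather than keyword matching; the keyword rule is only the simplest way to show the shape.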

Combination models are likely to be commonplace (in fact, many foundation models are already ensembles of multiple models). But combining the capabilities of various models to solve a more specific task adds complexity at the layer where models and knowledge resources are integrated, which brings us to another layer of the generative AI stack.

Monkey in the Middle

Every technology platform shift has birthed a next generation of middleware winners, and generative AI middleware will be no exception. Historic shifts like client/server, Internet, SaaS, and Cloud have led to companies like BEA, TIBCO, MuleSoft, Apigee, Zapier, and others. A group of generative middleware companies is starting to emerge with names like LangChain, Fixie, and Dust, and we expect this layer to expand in importance with contributions from startups and incumbent software companies.

What do these middleware services do today? Their capabilities include chaining, prompting, agents, enhancing (with other data sources), actioning (with agents), persisting, and evaluating. They help generative app creators build apps that can access and integrate multiple models and knowledge sources, optimize prompting and chaining needs, and act on what an app generates. Early on, these integrations and services will run mostly “up and down” the stack between the generative apps and the foundation models, but quickly there will be horizontal integrations via APIs to other data stores and generative services.
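As a rough illustration of several of these capabilities working together, the sketch below chains an enhancing step, a prompting step, a model call, an evaluation, and a persistence step. It is written in plain Python and is not the API of any middleware product; every function name, the state-dictionary convention, and the toy evaluation rule are assumptions made for the example.

```python
from typing import Callable, Dict, List

# Assumption: each step takes a state dict of strings and returns an updated one.
State = Dict[str, str]
Step = Callable[[State], State]


def chain(*steps: Step) -> Step:
    """Chaining: compose steps left to right into a single callable."""
    def run(state: State) -> State:
        for step in steps:
            state = step(dict(state))  # copy so the caller's dict is not mutated
        return state
    return run


def enhance(docs: Dict[str, str]) -> Step:
    """Enhancing: attach stored text whose keyword appears in the question."""
    def step(state: State) -> State:
        question = state["question"].lower()
        hits = [text for key, text in docs.items() if key in question]
        state["context"] = " ".join(hits)
        return state
    return step


def prompt(template: str) -> Step:
    """Prompting: fill a template from the values already in the state."""
    def step(state: State) -> State:
        state["prompt"] = template.format(**state)
        return state
    return step


def call_model(model: Callable[[str], str]) -> Step:
    """Model call: `model` is a stand-in for any hosted or local model."""
    def step(state: State) -> State:
        state["answer"] = model(state["prompt"])
        return state
    return step


def evaluate(state: State) -> State:
    """Evaluating: a toy check that only flags empty answers."""
    state["ok"] = "yes" if state["answer"].strip() else "no"
    return state


def persist(log: List[State]) -> Step:
    """Persisting: append a snapshot of the state to an in-memory log."""
    def step(state: State) -> State:
        log.append(dict(state))
        return state
    return step
```

A pipeline might then be assembled as `chain(enhance(docs), prompt(template), call_model(model), evaluate, persist(log))` and invoked with `{"question": "..."}`. Keeping each capability as a small, swappable step is one plausible design; real middleware adds agents, retries, and richer evaluation on top of this basic shape.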

The utility of these and other middleware services will evolve as the underlying foundation model architectures and the generative apps become more sophisticated. Prompt engineering, for example, may become less important while the breadth and depth of generative “agents” are going to dramatically increase. Middleware will also help enable enterprise-ready capabilities that give the app creators the confidence to truly automate a service – including the generative actions – without humans in the loop. All builders, including native AI companies, existing software companies, and incumbent industry players, will be trying to figure out how to leverage the generative AI stack. There are many strategic choices to consider when building generative apps, including what to build yourself at the various layers of the generative AI stack, how to leverage your customers and data, and how to partner with other technology providers.

Generative Native or Generative Enhanced?

Compelling generative native applications are already emerging in sectors like writing, images, video, and code creation. Companies like Jasper.ai, Copy.ai, Otherside, and, of course, OpenAI’s ChatGPT have gone the native route, supporting a wide variety of writing use cases and getting customers to pay them for these services. RunwayML is an early leader in the video creation and editing market and is fortunate to have a team that deeply understands the generative AI stack. They even contributed to the movie “Everything Everywhere All at Once,” which won seven Oscars this year. We believe generative-native companies are taking a fresh look at a customer’s problem, the technological capabilities available to create a better solution, and the organizational attributes needed to build and deliver those applications. As Tomasz Tunguz thoughtfully shared recently, building, deploying, and operating generative apps will require changes in product development, organization, and culture.

But established companies have strengths in deep customer relationships, established workflows, and user experiences and data repositories that could power targeted and tailored generative models. These software businesses and enterprises have built their own custom applications over the years and need to decide whether to build generative-native apps or enhance their existing software applications with embedded generative capabilities. If they choose the enhanced path over the native one, what types of models, knowledge stores (essentially data and metadata sources), and middleware are best for them to use in extending the value of their software?

We believe many incumbents will choose the generative enhanced path and combine fine-tuned versions of larger foundation models with their own data and workflow capabilities, similar to how ISVs leverage the major cloud service providers. They will also want to build or access long-tail models that help them enhance their existing apps. They can bootstrap these capabilities by finding base models on Hugging Face and utilizing model optimization solutions like OctoML to accelerate their time to market. This will enable them to rapidly and iteratively extend generative capabilities embedded in their software to their customers. We believe every incumbent company should be evaluating how to leverage generative AI by thinking strategically about its impact on their business and acting empirically to learn how customers and partners want them to apply these transformative capabilities.

Who Captures the Flag?

The generative AI stack has become clearer with the combination of foundation models, generative middleware, and a mix of gen-native and enhanced apps. It is also clear to us that substantial value will be created and captured in this massive wave of innovation and disruption. Just look at the productivity gains GitHub is highlighting from the use of Copilot or the creativity gains that individuals are experiencing from using ChatGPT or Midjourney.

What is not as clear is which layer(s) of the stack or which solutions across the stack will capture the most value. There are also questions about data rights to train models and innovative approaches to user engagement and experience models with generative apps that create new data and enable reinforcement learning from human feedback (RLHF).

Plenty of game theory lies ahead for the companies active in the generative AI stack. What will large technology companies like Amazon, Google, and Meta focus on as they increase their generative AI efforts? How will incumbent software companies like Workday, Salesforce, or Smartsheet embrace the embedded generative AI opportunities? Which layers of the stack will attract more founders and venture dollars to build the gen-native disrupters? How will the various layers of the stack evolve as the models, middleware, and applications themselves become more capable and pervasive? Which specific companies will execute in ways that enable them to create a durable flywheel leveraging RLHF and more? We agree with the assertion Kirk Materne of Evercore made last week in an analyst report:

“The bottom line in our view is that the continued advances in LLMs is going to create more opportunities for software vendors to embed AI functionality that combines 1st party data with generative AI in order to create new insights and increased productivity for customers.”

While AI has been around for decades (just as the internet had been around for decades before the Mosaic browser), we are just at the beginning of the generative AI revolution. And, as technology leaders like Satya Nadella, Andy Jassy, and Sam Altman have already shared in various forums, it will be a source of innovation and disruption for a long time to come. As venture investors and company builders engaged in the applied AI world for the past decade, we are energized by the competition between incumbents and startups that lies ahead. So, game on!