“We always overestimate the change that will occur in the next two years and underestimate the change that will occur in the next ten. Don’t let yourself be lulled into inaction.” – Bill Gates, The Road Ahead

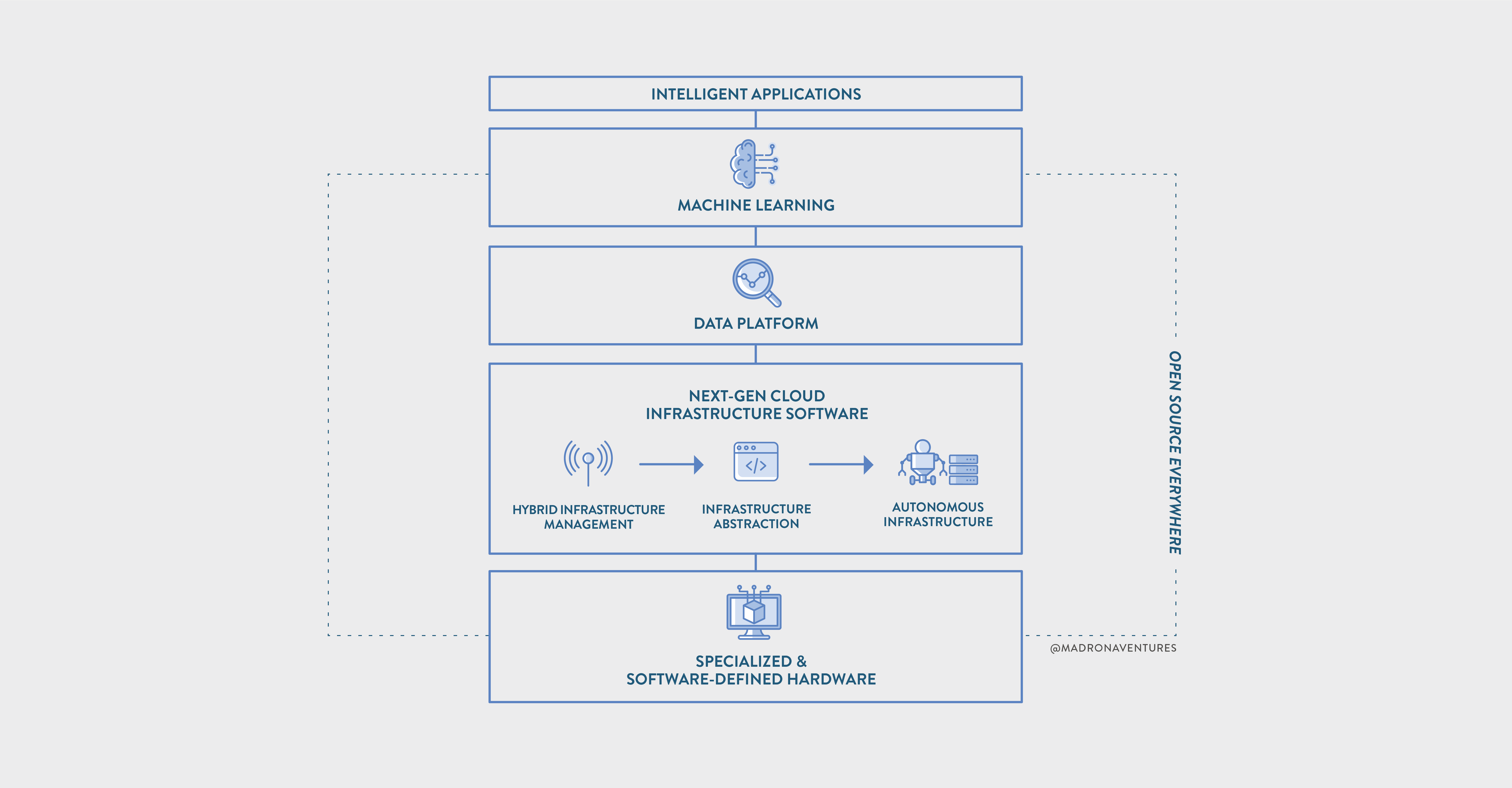

Just over a year ago, we wrote about how enterprise infrastructure was being reimagined and remade, driven by the rapid adoption of cloud computing and rise of ML-driven applications. We had postulated that the biggest trends driving the next generation of enterprise software infrastructure would be (a) cloud-native applications across hybrid clouds, (b) abstraction/automation of infrastructure, (c) specialized hardware and hardware-optimized software, and (d) open source software.

Since then, we have witnessed several of those trends accelerating while others are taking longer to gain adoption. The COVID-19 pandemic over recent months, in particular, has arguably accelerated enterprises multiple years down evolutionary paths they were already on – digital transformation, move to the cloud for business agility, and a perimeter-less enterprise. Work and investments in these areas have moved from initiatives to imperatives, balanced with macroeconomic realities and the headwind of widespread spending cuts. Against that backdrop, today we again take stock of where next-generation infrastructure is headed, recapping which trends we feel are accelerating, which are emerging, and which are stalling – all through the lens of customer problems that create opportunities for entrepreneurs.

Next-generation enterprise infrastructure, as we show in the figure above, will be driven by major business needs including usability, control, simplification, and efficiency across increasingly diverse, hybrid environments and evolve along the four dimensions of (1) cloud native software services, (2) developer experiences, (3) AI/ML infrastructure, and (4) vertical specific infrastructure. We dive into these four areas, and their respective components, in the rather lengthy post below. We hope some of you will read the whole thing, and others can jump to their area of interest!

As we have noted in the past, below are a few “blueprints” as we look for the next billion-dollar company in enterprise infrastructure. As we continue to meet with amazing founders who surprise and challenge us with their unique insights and bold visions, we continue to refine and recalibrate our thinking. What are we overlooking? What do you disagree with? Where are we early? Where are we late? We’d love to hear your thoughts. Let’s keep the dialogue going!

Cloud Native Software and Services

Cloud native technologies and applications continue to be the biggest innovation wave in enterprise infrastructure and will remain so for the foreseeable future. As 451 Research and others point out, “… the journey to cloud-native has been embraced widely as a strategic imperative. The C-suite points to cloud-native as a weapon it will bring to the fight against variables such as uncertainty and rapidly changing market conditions. This viewpoint was born prior to COVID-19 – which brings all those variables in spades. As this crisis passes, and those who survive plan for the next global pandemic, there are many important reasons to include cloud-native at the core of IT readiness.”[1]

However, enterprises that have begun to adopt technologies such as containers, Kubernetes, and microservices are quickly confronted with a new wave of complexity that few engineers in their organization are equipped to tackle. This is producing a second wave of opportunity to ease this adoption path.

Hybrid and Multi-cloud Management

We highlighted last year that we are now in a “hybrid cloud forever” world. Whether workloads run in a hyperscale public cloud region or on-premises, enterprises will adopt a “cloud model” for how they manage these applications and infrastructure. We are seeing the forces driving such multi-site and multi-cloud operations continuing to accelerate. While AWS remains the leader, both Azure and Google are adding new data centers around the world and expanding support for on-premises applications. Azure has gained significant ground with a growing number of services that are production-ready, and Google has invested heavily in expanding its enterprise sales and service capabilities while continuing to offer best-in-class ML services for areas such as vision and speech, along with TensorFlow. Azure and Google continue to close the gaps and are often preferable to AWS in situations where enterprises must comply with regulatory directives for data residency and need to account for possible changes in strategic direction that may require migrating their applications to different cloud providers.

These compliance and data residency considerations are leading organizations to invest in skills and tools for building applications that are easily portable, which improves deployment agility and reduces the risk of vendor lock-in. This creates new sets of challenges in operating applications reliably across varying cloud environments and in ensuring security, governance, and compliance with internal and external policies. In 2019, we invested in MontyCloud which helps companies address the Day 2 operational complexities of multi-cloud environments. We continue to see more opportunities in hybrid and multi-cloud management as regulatory guidelines continue to evolve and organizations emerge from the early stages of executing the shift.

Automated Infrastructure

Automated infrastructure management has been a key enabler for organizations that need to operate in varying cloud and on-premises environments. As containers have grown mainstream, container orchestration with Kubernetes is becoming the most common enterprise choice for operating complex applications. Combining version-controlled configuration and deployment files with operational stability based on control loops has enabled teams to embrace DevOps and automation at the same time while building applications that are portable across on-premises and multi-cloud environments. We invested in Pulumi, which allows organizations to use their programming language of choice in place of Kubernetes YAML files or other domain-specific languages, further enabling a unified programming interface with the same development workflows and automated pipelines that development teams are already familiar with.
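To make the control-loop idea concrete, here is a minimal sketch of a single reconciliation pass: a declared (version-controlled) desired state is compared with the observed state, and corrective actions are produced. The service names, the `Action` type, and the `reconcile` and `apply_actions` helpers are all hypothetical and exist only for illustration; this is not the Kubernetes or Pulumi API, and a real controller would run the pass continuously against a live infrastructure API.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Action:
    kind: str      # "start" or "stop"
    service: str   # hypothetical service name
    count: int     # number of replicas to start or stop


def reconcile(desired: Dict[str, int], observed: Dict[str, int]) -> List[Action]:
    """Compare desired and observed replica counts and return corrective actions."""
    actions: List[Action] = []
    for service in sorted(set(desired) | set(observed)):
        want = desired.get(service, 0)
        have = observed.get(service, 0)
        if want > have:
            actions.append(Action("start", service, want - have))
        elif have > want:
            actions.append(Action("stop", service, have - want))
    return actions


def apply_actions(observed: Dict[str, int], actions: List[Action]) -> Dict[str, int]:
    """Simulate executing the actions; a real system would call an infrastructure API."""
    result = dict(observed)
    for action in actions:
        delta = action.count if action.kind == "start" else -action.count
        result[action.service] = result.get(action.service, 0) + delta
    # Drop services that no longer have any running replicas.
    return {name: n for name, n in result.items() if n > 0}


# The desired state would normally live in version control.
desired = {"web": 3, "worker": 2}
observed = {"web": 1, "cache": 1}

actions = reconcile(desired, observed)
observed = apply_actions(observed, actions)
```

Once the observed state matches the declaration, a further call to `reconcile` returns no actions. That convergence is what gives the loop its stability: drift is corrected on the next pass rather than by a one-off script.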

Machine Learning continues to promise automation of capacity management, failover and resiliency, security, and cost optimization. We see further innovation in ML-powered automation services that will allow developers to focus on applications rather than infrastructure monitoring while enabling IT organizations to identify vulnerabilities, isolate insecure resources, improve SLAs, and optimize costs. While we are already seeing technologies such as autonomous databases offer the promise of automating index maintenance, performance, and cost tuning, we have yet to see wider innovation in this space. We expect some of these capabilities to be natively offered by the public cloud providers. The opportunity for startups will be to offer a solution that leverages unique data from varying sources, delivering effective controls and mitigation, and supporting multi-cloud and on-premises environments.

Serverless

Serverless remains at the leading edge of automated infrastructure, where developers can focus on business logic without having to automate, update, or monitor infrastructure. This is creating opportunities across multiple application segments, from front end applications gaining richer functionality through APIs to backend systems expanding integrations with event sources and gaining richer built-in business logic to transform data. AWS Lambda continues to lead the charge, lending some of its core concepts and patterns to a range of fast-growing applications. However, migrating traditional enterprise applications to an event-driven serverless design can require enterprises to take a larger than anticipated leap. While several pockets of an organization could be experimenting with serverless applications, we continue to look for signs of broader adoption across the enterprise. New approaches that help serverless more effectively address internal policy and compliance requirements would help grease the skids and increase the adoption for many of these serverless applications. Opportunities exist for new programming languages to make it easier to write more powerful functions along with new approaches for managing persistence and ensuring policy compliance. As applications begin to operate across increasingly diverse locations, distributed databases such as FaunaDB will help address the need to persist state in addition to elastically scaling stateless compute resources in transient serverless environments. We are more convinced than ever that serverless will grow to be a dominant application architecture over time, but it will not happen overnight and thus far has been developing more slowly than we forecasted.

Security

With the growth of applications across public cloud regions, remote locations, and individual devices, enterprises are already learning new approaches to secure data at rest, define data perimeters, and establish secure network paths. The move to working-from-home has accelerated this evolution, not only from a network perspective but also with a proliferation of bring-your-own-device (BYOD) usage. We are seeing continued and often increasing activity on several fronts:

- Securing hardware and devices. Our portfolio company Eclypsium protects against firmware and hardware exploits, helping enterprises deal with the new normal of a distributed workforce and an increasingly risky environment of sophisticated attackers. We expect to see more companies realizing the need for firmware and hardware protection as well as broader opportunities around next generation endpoint protection solutions to support work-from-home, BYOD, and the now perimeter-less enterprise.

- Secure computing environments. New virtualization technologies such as Firecracker, built in languages such as Rust, are already delivering security and performance in constrained capacity environments. This is particularly valuable for the next generation of applications designed for low latency interactions with end users around the world. With WebAssembly (Wasm), code written in almost any popular language can be compiled into a binary format and executed in a secure sandboxed environment within any modern browser. This can be valuable when optimizing for resource hungry tasks such as processing image or audio streams where JavaScript isn’t the right tool for the required performance.

- Securing data in use. While cryptographic methods can secure data at rest and in motion, these methods alone may be inadequate to protect data in use when it sits unencrypted in system memory. Secure enclaves provide an isolated execution environment that ensures that data is encrypted in memory and decrypted only when being used inside the CPU. This enables scenarios such as processing sensitive data on edge devices and aggregating insights securely back to the cloud.

- Data privacy. Automated data privacy remains a challenge for companies of all sizes. GDPR and CCPA have resulted in unicorns such as OneTrust (which just acquired portfolio company Integris) as more countries adopt and implement similar regulations. Organizations around the world across industry verticals will require new workflows and services to store and access critical data as well as address an enduring business priority of understanding various data attributes – where it lives, what it contains, and what policies must apply to various usage patterns.

- Securing distributed applications. Traditional approaches to securing applications that were designed for monolithic applications continue to be upended by distributed, microservices-based applications where security vulnerabilities may sit at varying points in the network or component services. Our portfolio company ExtraHop’s Reveal(x) product exemplifies the value of deeply analyzing network traffic in order to secure applications. We expect to see this market continuing to expand in the future. We believe that companies can turn managing security from a business risk into a competitive advantage by embracing “SecOps.” SecOps includes building secure applications from the ground up, using secure protocols with end-to-end encryption by default, building tools to quickly identify and isolate vulnerabilities when they arise, and modernizing the way teams work together by integrating security planning and operations directly into development teams. We are interested in new companies that further enable this SecOps approach for customers.

Developer Experiences

Rapid Application Development

Where front end and back end components were historically packaged together, we are seeing these components increasingly decoupled to speed up application development and raise productivity of relatively non-technical users.

For example, developers working on simple web applications, such as corporate websites, marketing campaigns, and small private publications that don’t require complex backend infrastructure, are already realizing the advantages of automated build and deployment pipelines integrated with hosting services. These automated workflows enable developers to see their updates published immediately and delivered blazingly fast through CDNs in SEO friendly ways. Open source JavaScript-based frameworks such as GatsbyJS and Next.js can improve application performance by an order of magnitude by simply generating static HTML/CSS at build time or pre-rendering pages at the server instead of client devices. These improvements in application performance, combined with the ease of deploying to hosting platforms, are empowering millions of front-end developers in building new applications.

Content Management Systems (CMS) that store and present the data for these simple web applications have turned ‘headless,’ storing and serving data through APIs that can be plugged into different applications across varying channels. This has enabled non-technical users to simply update their corporate website or product and documentation pages without depending on engineers to deploy updates. This points to a related trend of a rapidly growing API ecosystem that can enrich these ‘simple’ applications with functionality delivered by third party providers.

In fact, workflows (business activities such as processing customer orders, handling payments, adding loyalty points once a purchase is complete, etc.) in modern enterprises are increasingly implemented by calling a set of different (often 3rd-party) services that could be implemented as serverless functions or in other forms. While each service is independent and does not have any context of any other service, business logic dictates the order, timing, data, etc. with which each service should be called. That business logic needs to be implemented somewhere – using code – and the scheduling of each constituent service needs to be done by an orchestration engine. A workflow engine is exactly that – it stores and runs the business logic when triggered by an event and orchestrates the underlying services to fulfill that workflow. Such an engine is essential to build a complex, stateful, distributed application out of a collection of stateless services. The rapidly growing popularity of open source workflow engines such as Cadence (from Uber) is a good testament to this trend and we expect to see much more activity in this space going forward.
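As a rough illustration of that division of labor, the sketch below keeps the services stateless and puts the ordering and the shared state in a tiny in-memory engine. Everything here is hypothetical: the `WorkflowEngine` class, the `order_placed` event name, the two stand-in services, and the loyalty-point rule are assumptions for illustration, and this is not Cadence's API. A production engine would also persist state durably and handle retries, timeouts, and failures.

```python
from typing import Any, Callable, Dict, List

State = Dict[str, Any]
Service = Callable[[State], State]


def charge_payment(state: State) -> State:
    # Stand-in for a call to a third-party payment service.
    return {"paid": state["amount"] > 0}


def add_loyalty_points(state: State) -> State:
    # Assumed rule for illustration: one point per 10 currency units, only once paid.
    return {"points": state["amount"] // 10 if state["paid"] else 0}


class WorkflowEngine:
    """Stores workflows (ordered steps) and runs them when an event arrives."""

    def __init__(self) -> None:
        self._workflows: Dict[str, List[Service]] = {}
        self.history: List[str] = []

    def register(self, event_type: str, steps: List[Service]) -> None:
        # The business logic (which services, in which order) lives here.
        self._workflows[event_type] = list(steps)

    def handle(self, event_type: str, payload: State) -> State:
        # The engine, not the services, carries state from one step to the next.
        state = dict(payload)
        for step in self._workflows[event_type]:
            state.update(step(state))
            self.history.append(step.__name__)
        return state


engine = WorkflowEngine()
engine.register("order_placed", [charge_payment, add_loyalty_points])
result = engine.handle("order_placed", {"order_id": "A1", "amount": 120})
```

Neither service knows the other exists; `add_loyalty_points` only sees the `paid` flag because the engine merged the payment result into the workflow state before calling it.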

Everything as an API

Whether it’s a single page application with a mobile front end or a microservice that’s part of a complex system, APIs are enabling developers to reuse existing building blocks in different contexts rather than build the same functionality from scratch. “Twilio for X” has become shorthand for businesses that turn a frequently needed service into an easy to use, reliable, and affordable API that can be plugged into any distributed application. While Twilio (SMS), Stripe (payments), Auth0 (authentication), Plaid (fintech) and SendGrid (emails) are already examples of successful API-focused companies, we continue to see more interesting companies in this area such as daily.co (adds 1-click video chat to any app/site), Sila (Madrona portfolio company providing ACH and other fintech back-end services as an API), and many more. As the API economy grows, so does the need for developers to easily create, query, optimize, meter, and secure these APIs. We are already seeing technologies such as GraphQL driving significant innovation in the API infrastructure and expect to see many more opportunities in this space.

AI/ML Infrastructure

Data Preparation

Data preparation remains the largest drain on productivity in data science today. Merging data from multiple sources, cleansing and normalizing training data, labeling and classifying this data, and compensating for sparse training data are common pain points that we hear from customers and our portfolio companies. Vertical applications that mine unstructured data are a large investment theme, reflected in Madrona investments such as the intelligent contract management solution Lexion, as well as in significant social challenges such as identifying and moderating misleading or toxic online content. Technologies such as Snorkel that help engineers quickly label, augment and structure training datasets hold a lot of promise. Similarly, tools such as Ludwig make it easier to train and test deep learning models for citizen data scientists and developers. These are examples of tools beginning to address the broader need for better and more efficient means of preparing data for effective ML models.

Data Access & Sharing

Another key challenge relates to developing and publishing data catalogs with the parallel challenge of accessing critical data in secure ways. Often superficial policies and access controls limit the extent to which scientists are able to use sensitive data to train their models. At times, the same scientist is unable to reuse the data that they used for a previous model experiment. We see data access patterns differing across different steps in the model development workflow, indicating the need for data catalog solutions that provide built-in access controls as enterprises begin to consolidate data from a rapidly growing set of sources. This challenge of federating and securing data across organizations while ensuring privacy – whether partners, vendors, industry consortia, or regulatory bodies – is an increasingly important problem that we are observing in industries such as healthcare, financial services, and government. We see opportunities for new techniques and companies that will arise to enable this new “data economy.”

Observability & Explainability

As the use of machine learning models explodes across all facets of our lives, there’s an emerging need to monitor and deliver real-time analytics and insights around how a model is performing. Just as a whole industry has grown around APM (application performance management) and observability, we see an analogous need for model observability across the ML pipeline. This will enable companies to increase the speed at which they can tune and troubleshoot their models and diagnose anomalies as they arise without relying on their chief data scientists to root cause issues and explain model behavior. Explaining model behavior may sometimes be straightforward, such as in some medical diagnostic scenarios. In other cases, the need for underlying reasoning could be driven by regulation/compliance, customer requirements, or simply a business need to better understand the results and accuracy of model predictions. So far, explaining model predictions has largely been an academic exercise, though interesting new companies are emerging to operationalize this functionality in production for their customers.

Computer Vision and Video Analytics

The use cases for better, faster, and more accurate computer vision and analysis of video continue to proliferate. The COVID pandemic has highlighted more remote sensing scenarios and the use of robotics in scenarios ranging from cleaning to patient monitoring. Analyzing existing video streams for deep fakes is front and center in consumer consciousness while business scenarios for video analytics in media and manufacturing efficiency are promising new areas. Converting video streams to a visual object database could soon enable ‘querying’ a video stream for, say, the number of cars that crossed a given intersection between 10:00 and 10:15am. While entrepreneurs need to ethically navigate the privacy concerns around video analysis, we feel there will be numerous new company opportunities in this area.
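To give a sense of what such a query might look like once a vision model has turned frames into structured records, here is a minimal sketch. The `Detection` schema, the `zone` and `track_id` fields, and the sample data are assumptions made for illustration; they do not describe any particular product, and the hard part (producing reliable detections and tracks from video) is outside the sketch.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Iterable


@dataclass(frozen=True)
class Detection:
    track_id: int      # hypothetical ID that follows one object across frames
    label: str         # object class assigned by the vision model
    zone: str          # named region of the frame, e.g. an intersection
    seen_at: datetime  # timestamp of the frame


def count_objects(detections: Iterable[Detection], label: str, zone: str,
                  start: datetime, end: datetime) -> int:
    """Count distinct tracked objects of a class seen in a zone during [start, end)."""
    tracks = {
        d.track_id
        for d in detections
        if d.label == label and d.zone == zone and start <= d.seen_at < end
    }
    return len(tracks)


day = (2020, 6, 1)  # arbitrary sample date
detections = [
    Detection(1, "car", "intersection", datetime(*day, 10, 2)),
    Detection(1, "car", "intersection", datetime(*day, 10, 3)),   # same car, later frame
    Detection(2, "car", "intersection", datetime(*day, 10, 14)),
    Detection(3, "truck", "intersection", datetime(*day, 10, 5)),
    Detection(4, "car", "intersection", datetime(*day, 10, 20)),  # outside the window
    Detection(5, "car", "parking", datetime(*day, 10, 6)),        # different zone
]

cars = count_objects(detections, "car", "intersection",
                     datetime(*day, 10, 0), datetime(*day, 10, 15))
```

Counting distinct `track_id` values rather than rows matters because the same car appears in many consecutive frames; without tracking, the query would overcount.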

Model Optimization for Diverse Hardware

The hyperscale cloud providers continue to release new compute instances and chips optimized for specific workloads, particularly for machine learning. Aiming to realize the desired performance on these specialized instances in any cloud environment or edge location as well as a range of hardware devices, businesses need a path to optimize their models to run efficiently on diverse hardware platforms. We recently invested in an exciting new company, OctoML, that builds on Apache TVM (an open source project created by OctoML’s founders), offering an end to end compiler stack for models written in Keras, MXNet, PyTorch, TensorFlow, and other popular machine learning frameworks. We continue to believe that hardware advances in this space will create new investment opportunities for applications across domains such as medical imaging, genomics, video analytics, and rich edge applications.

Vertical-specific Infrastructure

The Impact of 5G

Major wireless providers have begun rolling out 5G services while cloud providers such as AWS (with Wavelength) and Azure ($1B+ acquisitions of Affirmed Networks and Metaswitch) have been investing in supporting software services. Investments in next generation telecom infrastructure could provide significant opportunities for operators to move to virtual network appliances for functions that previously required specialized hardware devices as well as expensive operations and support systems to provision these services. Further, the greater bandwidth and software-defined network infrastructure being built for 5G should create a variety of new opportunities for startups such as (a) network management for enterprises including converged WiFi/5G networks, (b) the harnessing and orchestration of new data (what will be connected and measured that never has been before?), (c) new vertical applications and/or new business models for existing apps, and (d) addressing global issues of compatibility, coordination, and regulation. Like previous wireless network standard upgrades, the full move to 5G and its impacts will undoubtedly take a number of years to be fully realized. That being said, given current rollouts in key geographies, we expect the software ecosystem around 5G to coalesce fairly rapidly, creating new company opportunities in both the near and medium term.

Continued Proliferation of IoT

Relatedly, we expect 5G to push the wave of digitization beyond the inherently data-rich industries such as financial services and into more industrialized sectors such as manufacturing and agriculture. The Internet of Things (IoT) will capture the data in these sectors and is likely to result in billions of sensors being attached to a variety of machines. Earlier this year we invested in Esper.io, which helps developers manage intelligent IoT devices, extending the type of DevOps functionality that exists in the cloud to any edge device with a UI; such devices are increasingly Android-based. Industrial IoT also continues to emerge into the mainstream with manufacturing companies investing in ML and other analytics solutions after years of discussion. We think companies taking a vertical approach and providing applications tailored to the specific needs of a certain industry will grow most quickly.

Vertical-Specific Hardware+Software

We are also seeing several verticals requiring specialized hardware for key business functions. For example, electronic trade execution services must provide deterministic responses to orders placed within a small window of time. In addition to requiring hardware-based time sync across the network, participants often use specialized hardware including FPGAs to execute their algorithms. FPGAs are also common in high speed digital telecom systems for packet processing and switching functions. Similarly, FPGA-based solutions are being adopted across healthcare research disciplines. FPGAs can accelerate identifying matches between experimental data and possible peptides or modified peptides that can be evaluated in near real time, enabling deeper investigation, faster discovery, and more effective diagnostics to improve healthcare outcomes. We are realizing that a long tail of such applications across verticals would benefit from a cloud-based “hardware-as-a-service” that offers a path for almost every application to run in the cloud.

Business Model Innovation

While this post has been largely organized around business needs that are being met by technology innovations and new product opportunities, we are also interested in investing in companies that take advantage of related business model innovations that these technological advances in enterprise infrastructure have enabled. For instance, the move to the cloud allows companies to provide consumption-based pricing, self-service models, “as-a-service” versions of products, freemium SKUs, rapid POCs and product trials, and direct reach to end-user developers or operations team members. We are equally interested in founders and companies that have found new ways to go-to-market and efficiently identify and reach customers.

Relatedly, the continued adoption of open source as the predominant software licensing approach for enterprise infrastructure has created new opportunities for business model innovation, significantly evolving the traditional “paid support” model for open source to open core and “run it for you” approaches. Enterprises are increasingly demanding an open source option because of the typical benefits of lower TCO and control. Developers (and vendors) love open source because of the bottom-up adoption that creates validation and virality. At the same time, the bigger platforms (cloud providers) are embracing open source technologies on their platforms, often in a manner that creates an inherent tension with commercial companies built around those same open source technologies. We continue to strongly believe that having a differentiated, unique value proposition on top of an open source project is critical for a commercial company to be built. It is that differentiated value proposition that ultimately creates a strong moat and defensibility from the platform companies supporting open source technologies on their stack. We anticipate that all these factors, plus this intrigue of heightened tensions between hyperscale clouds and open source vendors, will add up to continued opportunity in the dynamic world of open source in the years to come.

[1] 451 Research, April 9, 2020, “COVID-19: Cloud-native impacts.” Brian Partridge, William Fellows, et al.