Well, this is fun. The current pace of activity in artificial intelligence is nothing short of astonishing. Generative AI applications, along with a larger category of apps that apply complex reasoning to data, are proliferating on top of foundation models. The apps range from the practical (accelerating code development and testing, legal contracting, and the production of Oscar-nominated films) to the fun (multimodal generative rap battles) to the thought-provoking (performing at or near passing levels on the U.S. medical licensing exam). And the underlying model capabilities, model accuracy, and infrastructure are evolving at least as quickly.

If this all feels “different,” that’s because it is. The emergence of the cloud a generation ago made available computing power that was previously out of reach, enabling new areas of computer science, including transformer models. That model architecture demonstrated that you can use cloud compute to build much larger models that generalize better and can handle new tasks, such as text and image generation, summarization, and categorization. These larger models have shown emergent capabilities (complex reasoning, reasoning with knowledge, and out-of-distribution robustness), none of which are present in smaller, more specialized models. They are called foundation models because developers can build applications on top of them.

But notwithstanding all the activity and the furious pace of innovation, it remains clear that the future isn’t happening fast enough with foundation models and generative AI. Builders face an unappealing choice: easy to build but hard to defend, or the opposite. In the first option, foundation models let developers create apps in a weekend, or even in minutes, that used to take months. But developers are limited to the off-the-shelf capabilities of those proprietary models, which other developers can use too, so they must get creative to find a source of differentiation. In the second option, developers can extend the capabilities of open-source model architectures to build something novel and defensible. But that requires a galactic level of technical depth, which too few teams possess. That’s the opposite of the direction we need to go as an industry: we need more power in more hands, not even greater concentration.

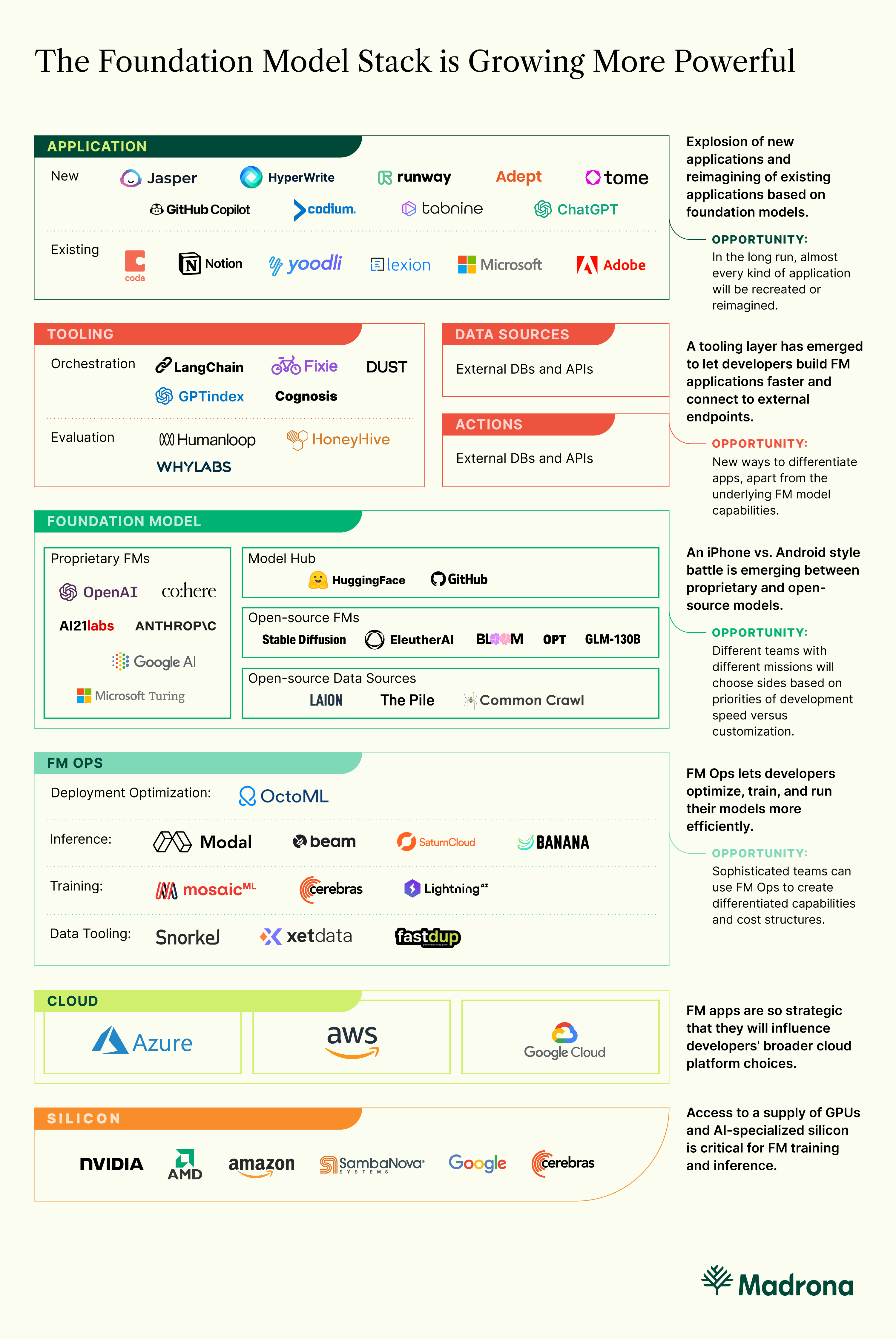

But if we consider large-scale foundation models as a new application platform, mapping out the broader technical stack shows that those challenges also hold opportunities for founders. We wrote an article late last year describing this stack and predicting the emergence of a tooling layer. The stack has evolved so quickly (and a tooling layer has started to form so rapidly!) that it’s now worth another look.

Looking at the state of the foundation model stack today, we see three opportunities:

- Build Novel Applications: The most technically sophisticated teams have wide-open frontiers ahead of them. There is so much innovation to be done, especially in information retrieval, mixed-modality, and training/inference efficiency. Teams in this area can push the boundaries of science to create applications that were not possible before.

- Find Differentiation: Teams with great ideas but only early technical abilities suddenly have access to tooling that makes it possible to build richer applications with longer memories/context, richer access to external data sources and APIs, and the ability to evaluate and stitch together multiple models. That provides a broader set of avenues for founders to build novel and defensible products, even if they use widely available technologies.

- Build Tools: Teams who like infrastructure have a high-leverage opportunity to build tooling in orchestration (developer frameworks, data sources and actions, evaluation) and foundation model operations (infrastructure and optimization tooling for deployment, training, and inference). More powerful and flexible tooling will empower existing builders and make the foundation model stack accessible to a much broader population of new builders.

Foundation Models

The unappealing tradeoffs that foundation model developers face (easy to build new apps but hard to defend them, or the opposite) are rooted in how the core models are built and exposed. Builders must choose sides today in an iPhone/Android or Windows/Linux style battle, with painful tradeoffs on each side. On one side are highly sophisticated, rapidly evolving proprietary models from OpenAI, co:here, and AI21; we can add Google to the list because it has been working on these models longer than anyone else and clearly plans to externalize them. On the other side are open-source architectures such as Stable Diffusion, EleutherAI’s models, GLM-130B, OPT, BLOOM, the Alexa Teacher Model, and more, all organized on Hugging Face as a community hub.

Proprietary models — These are owned by providers with deep pockets and technical sophistication, which means they can offer best-in-class model performance. The off-the-shelf nature of their models also makes them easy for developers to get up and running with. Azure’s new OpenAI Service makes it easier than ever to get started, and we expect it to further accelerate developers’ pace of experimentation. These folks are working on cost, too: OpenAI reduced its prices by 60% in late 2022, and Azure matches those prices. But costs are still high enough to limit the set of sustainable business models. We see early examples of per-seat licenses and consumption-based pricing, and those can work. But ad-supported business models might not generate enough revenue to cover this level of cost.
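To make the cost point concrete, here is a back-of-the-envelope sketch of per-user unit economics. Every number in it is a hypothetical placeholder (requests per user, tokens per request, the per-1K-token price, and revenue per user), not data from any provider; the sketch only shows how consumption-based model pricing flows through to per-user margin under different business models.

```python
from dataclasses import dataclass


@dataclass
class UsageProfile:
    """Hypothetical per-user usage assumptions (placeholders, not provider data)."""
    requests_per_month: int
    tokens_per_request: int      # prompt + completion tokens
    price_per_1k_tokens: float   # USD, assumed consumption-based API price


def monthly_inference_cost(profile: UsageProfile) -> float:
    """Model API spend per user per month under simple per-token pricing."""
    tokens = profile.requests_per_month * profile.tokens_per_request
    return tokens / 1000 * profile.price_per_1k_tokens


def monthly_margin(revenue_per_user: float, profile: UsageProfile) -> float:
    """Revenue per user minus model cost only; ignores every other cost of running the app."""
    return revenue_per_user - monthly_inference_cost(profile)


if __name__ == "__main__":
    user = UsageProfile(requests_per_month=300, tokens_per_request=1_500, price_per_1k_tokens=0.02)
    # 300 requests x 1,500 tokens = 450,000 tokens -> 450 x $0.02 = $9.00 per user per month
    print(f"inference cost per user: ${monthly_inference_cost(user):.2f}")
    for business_model, revenue in [("ad-supported", 2.00), ("per-seat license", 20.00)]:
        print(f"{business_model}: margin ${monthly_margin(revenue, user):.2f} per user")
```

Under these made-up inputs, the ad-supported case goes negative while the per-seat case stays positive; plugging in real usage and pricing shows where a given app actually lands.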

Open-source models — Their performance is not quite as high as that of the proprietary models, but it has improved dramatically over the past year. More important is the flexibility that technically sophisticated builders gain to extend those architectures and build differentiated capabilities that are not yet possible with the proprietary models. This is one of the things we love about Runway, a next-generation content generation suite that offers real-time video editing, collaboration, and more. To support all that functionality, Runway continues to make deep contributions to the science of multimodal systems and generative models, which unblocks faster feature development for Runway’s own customers.

The tension in foundation models has shifted to the iPhone/Android debate between proprietary and open-source models. The strengths of proprietary models are performance and ease of getting started. The strengths of open-source models are flexibility and cost efficiency. Builders can safely assume that each camp will invest in addressing its weaknesses (making OSS models easier to get started and making it possible to extend OpenAI models more deeply), even as they also lean into their strengths.

Tooling / Orchestration

We wrote back in October 2022 that “foundation models don’t ‘just work’ because they’re meant to be only one component of a broader software stack. Coaxing the best possible inferences from the foundation models today requires each application developer to take many ancillary steps.” Today, we indeed see intense developer focus at this level of the stack. Some of the coolest, highest-leverage work will happen here in the coming months, especially in developer frameworks, data sources and actions, and evaluation.

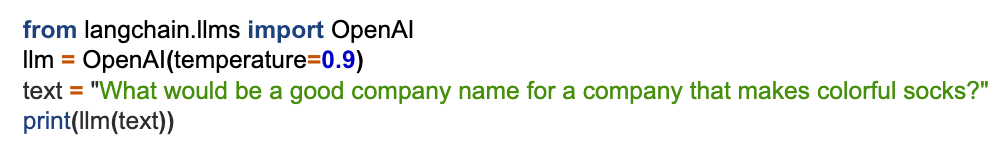

Developer Frameworks — History teaches us that frameworks (dbt, Ruby on Rails) are useful for snapping together the pieces of a larger application. Frameworks for foundation models will make it easy to combine things like context carried across multiple calls, prompt engineering, and the choice of foundation model (or multiple models in sequence). Researchers have begun to quantify how powerful that can be for building larger applications around foundation models, including models whose knowledge is “frozen” in time. LangChain, Dust.tt, Fixie.ai, GPT Index, and Cognosis are the projects catching developers’ attention in this part of the stack. It’s hard to describe how easy it is to get started with some of these frameworks. But it’s easy to demonstrate, so we’ll do that now. Here are four lines of getting-started code from LangChain’s developer guide:

That kind of developer framework makes it so easy to get started with foundation models that it’s almost fun. Astute observers might notice that with code like this, it would be almost effortless to replace the underlying LLM/FM for an app that has already launched, should the developer wish to. Those observers would be correct! In the bigger picture, making development easier tends to bring more developers into the fold and accelerate the creation of new apps. There is a furious pace of innovation at the tooling level, which creates opportunities both for builders of tools and for developers who will use those tools to create new apps.
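To illustrate why swapping models is so easy, here is a minimal sketch of the pattern these frameworks follow. It is not LangChain’s API: `LLM`, `PromptTemplate`, `Chain`, `EchoModel`, and `UpperModel` are hypothetical names, and the two stand-in models just transform text so the example runs offline. The point is that the chain depends only on a prompt-in, text-out interface, so the model behind it can change without touching the rest of the app.

```python
from typing import Protocol


class LLM(Protocol):
    """Anything that maps a prompt string to a completion string."""

    def __call__(self, prompt: str) -> str: ...


class EchoModel:
    """Hypothetical offline stand-in; a real app would call a provider's API here."""

    def __call__(self, prompt: str) -> str:
        return f"[echo] {prompt}"


class UpperModel:
    """A second hypothetical stand-in, to show that swapping models is a one-line change."""

    def __call__(self, prompt: str) -> str:
        return prompt.upper()


class PromptTemplate:
    """Fills an {input} placeholder in a fixed prompt."""

    def __init__(self, template: str) -> None:
        self.template = template

    def format(self, **kwargs: str) -> str:
        return self.template.format(**kwargs)


class Chain:
    """Runs templates in sequence, feeding each model output into the next prompt."""

    def __init__(self, llm: LLM, steps: list[PromptTemplate]) -> None:
        self.llm = llm
        self.steps = steps
        self.history: list[tuple[str, str]] = []  # (prompt, completion) pairs recorded across calls

    def run(self, text: str) -> str:
        for step in self.steps:
            prompt = step.format(input=text)
            text = self.llm(prompt)
            self.history.append((prompt, text))
        return text


if __name__ == "__main__":
    steps = [
        PromptTemplate("Suggest a name for a company that makes {input}."),
        PromptTemplate("Write a tagline for: {input}"),
    ]
    # Swapping the underlying model only changes the first argument to Chain.
    for model in (EchoModel(), UpperModel()):
        print(Chain(model, steps).run("colorful socks"))
```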

Data Sources and Actions — Today, foundation models are limited to reasoning about the facts on which they were trained. But that’s super constraining for application developers and end users who need to make decisions based on data that changes extremely fast. Think about the weather, financial markets, travel, supply inventory, and so on. So it will be a big deal when we figure out “hot” information retrieval, where instead of training or editing the model, we let the model call out to external data sources and reason about them in real time. Google Research and DeepMind have published some cool research in this direction, and so has OpenAI. So retrieval is coming, especially given the blistering pace at which research currently reaches production in this area.
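A minimal sketch of the retrieval idea, assuming a toy in-memory store of freshly fetched snippets: rather than retraining, the app pulls the most relevant up-to-date documents at query time and places them in the prompt. The word-overlap scoring and the `Document`, `retrieve`, and `build_prompt` names are hypothetical simplifications; production systems typically use embedding-based search instead.

```python
import re
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass
class Document:
    text: str
    fetched_at: datetime  # when this snapshot of external data was pulled


def tokenize(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, docs: list[Document], k: int = 2) -> list[Document]:
    """Return up to k documents sharing words with the query: best overlap first, fresher first on ties."""
    q = tokenize(query)
    scored = [(len(q & tokenize(d.text)), d) for d in docs]
    scored = [(score, d) for score, d in scored if score > 0]
    scored.sort(key=lambda pair: (pair[0], pair[1].fetched_at), reverse=True)
    return [d for _, d in scored[:k]]


def build_prompt(query: str, docs: list[Document]) -> str:
    """Place retrieved, timestamped context ahead of the question."""
    context = "\n".join(f"- ({d.fetched_at:%Y-%m-%d %H:%M}) {d.text}" for d in docs)
    return f"Answer using only the context below.\nContext:\n{context}\nQuestion: {query}"


if __name__ == "__main__":
    now = datetime(2023, 1, 20, 9, 0, tzinfo=timezone.utc)
    docs = [
        Document("Flight 100 to Seattle is delayed 45 minutes", now),
        Document("Seattle weather: heavy rain expected this afternoon", now),
        Document("Quarterly earnings call scheduled for Friday", now),
    ]
    question = "Is my Seattle flight delayed?"
    print(build_prompt(question, retrieve(question, docs)))
```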

The developer frameworks mentioned above all anticipate this evolution of foundation model science and support some concept of an external data source. Along similar lines, the developer frameworks also support a concept of downstream actions – calls to an external API such as Salesforce, Zapier, Google Calendar, or even an AWS Lambda serverless compute function. New kinds of foundation model applications are becoming possible with these data and action integrations that would have been difficult or impossible previously – especially for early teams building on top of proprietary models.
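Downstream actions can be sketched the same way. In this hypothetical example, the model is assumed to emit a JSON request such as `{"action": ..., "args": {...}}`, and the app executes only actions it has explicitly registered. The `create_calendar_event` and `send_webhook` functions are stubs standing in for real integrations like Google Calendar or Zapier; none of this is any framework’s actual API.

```python
import json
from typing import Any, Callable

# Registry of actions the model is allowed to trigger.
ACTIONS: dict[str, Callable[..., Any]] = {}


def action(name: str):
    """Decorator that registers a function under an action name."""
    def register(fn: Callable[..., Any]) -> Callable[..., Any]:
        ACTIONS[name] = fn
        return fn
    return register


@action("create_calendar_event")
def create_calendar_event(title: str, start: str) -> str:
    # Hypothetical stub: a real app would call a calendar API here.
    return f"created '{title}' at {start}"


@action("send_webhook")
def send_webhook(url: str, payload: dict) -> str:
    # Hypothetical stub standing in for a Zapier-style webhook or a serverless function.
    return f"POST {url} ({len(payload)} fields)"


def dispatch(model_output: str) -> str:
    """Parse a JSON action request emitted by the model and run it only if it is registered."""
    try:
        request = json.loads(model_output)
        name, args = request["action"], request.get("args", {})
    except (json.JSONDecodeError, KeyError, TypeError, AttributeError) as err:
        return f"error: malformed action request ({err.__class__.__name__})"
    if name not in ACTIONS:
        return f"error: unknown action '{name}'"
    return ACTIONS[name](**args)


if __name__ == "__main__":
    print(dispatch('{"action": "create_calendar_event", "args": {"title": "Demo", "start": "2023-01-20T10:00"}}'))
    print(dispatch('{"action": "delete_everything"}'))
```

Keeping an explicit allow-list means a model can only reach the integrations the developer chose to expose, which matters once actions have real side effects.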

Evaluation — We wrote back in October 2022 that “foundation models demand to be treated with kid gloves because we never quite know what they will say or do. The providers of these models, and the application developers who build on top of them, must accept the responsibility of managing those risks.” Developers are quickly growing more sophisticated about this. Academic benchmarks are an important first step in evaluating model performance. But even the most sophisticated benchmarks like HELM are imperfect because they are not designed to address the peculiarities of any one group of users or any one specific use case.

The best test sets come from end users. How many of your generated suggestions were accepted? How many conversational “turns” did your chatbot have? How long did users dwell on a particular image, or how many times did they share it? These kinds of inputs describe a pattern in aggregate, which developers can then use to tailor or interpret a model’s behavior to maximum effect. HoneyHive and HumanLoop are two examples of companies working to help developers iterate on underlying model architectures, modify prompts, filter and add novel training sets, and even distill models to improve inference performance for specific use cases.
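Here is a minimal sketch of turning those end-user signals into per-variant metrics, assuming a hypothetical event log in which each generated suggestion records the prompt or model variant that produced it, its conversation, and whether the user accepted it. Counting suggestions per conversation is only a rough stand-in for conversational turns.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Event:
    variant: str           # which prompt/model variant produced the output (hypothetical field)
    conversation_id: str
    accepted: bool         # did the user accept the generated suggestion?


def summarize(events: list[Event]) -> dict[str, dict[str, float]]:
    """Per-variant acceptance rate and average model outputs per conversation."""
    shown: dict[str, int] = defaultdict(int)
    accepted: dict[str, int] = defaultdict(int)
    conversations: dict[str, set[str]] = defaultdict(set)
    for e in events:
        shown[e.variant] += 1
        accepted[e.variant] += int(e.accepted)
        conversations[e.variant].add(e.conversation_id)
    return {
        v: {
            "acceptance_rate": accepted[v] / shown[v],
            "turns_per_conversation": shown[v] / len(conversations[v]),
        }
        for v in shown
    }


if __name__ == "__main__":
    log = [
        Event("prompt_a", "c1", True),
        Event("prompt_a", "c1", False),
        Event("prompt_a", "c2", True),
        Event("prompt_b", "c3", False),
        Event("prompt_b", "c3", False),
    ]
    for variant, metrics in summarize(log).items():
        print(variant, metrics)
```

Comparing variants on these aggregates is what lets a team decide which prompt or model change to keep.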

Tooling / FMOps

Compute is the primary cost driver for foundation model companies and constrains the business models they can choose. A new generation of tooling and infrastructure for training, deployment, and inference optimization is helping builders operate more efficiently and unblock new business models.

Foundation models have immense compute requirements for training and inference, requiring large volumes of specialized hardware. That is a significant contributor to the high costs and operational constraints (throughput and concurrency) that application developers face. The largest players can find the cash to cover this: consider the “top 5” supercomputer infrastructure Microsoft assembled in 2020 as part of its OpenAI partnership. But even the mighty hyperscalers face supply chain and economic constraints. Training, deployment, and inference optimizations are therefore critical areas of investment, where we see tons of innovation and opportunity.

Training — Open-source foundation models are becoming easier to modify and retrain than ever before. Whereas the largest foundation models can cost $10M or more to train, work such as the Chinchilla and Beyond Neural Scaling Laws papers shows that powerful models can be trained for $500,000 or even less. This means more companies can train models themselves. Today, practitioners can access large-scale datasets such as LAION (images), the Pile (diverse language text), and Common Crawl (web crawl data). They can use tools such as Snorkel, fastdup, and XetHub to curate, organize, and access those large datasets. They can access the latest and greatest open-source model architectures on Hugging Face. And they can use training infrastructure from Cerebras, MosaicML, and others to train those models at scale. Together, these resources let builders adopt the latest model architectures, modify the code that defines them, and train private models on a combination of public and proprietary data.
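As a rough worked example, two widely used rules of thumb let you estimate a training budget: Chinchilla’s finding that compute-optimal models see roughly 20 training tokens per parameter (Chinchilla itself used 70B parameters and 1.4T tokens), and the approximation that training costs about 6 FLOPs per parameter per token. The model size, sustained GPU throughput, and hourly price below are hypothetical placeholders, so treat the dollar figure as an illustration of the arithmetic, not a quote.

```python
def chinchilla_tokens(params: float, tokens_per_param: float = 20.0) -> float:
    """Compute-optimal training tokens under the ~20 tokens/parameter rule of thumb."""
    return params * tokens_per_param


def training_flops(params: float, tokens: float) -> float:
    """Common approximation C ~= 6 * N * D (forward + backward pass per token)."""
    return 6.0 * params * tokens


def training_cost_usd(flops: float, flops_per_gpu_second: float, usd_per_gpu_hour: float) -> float:
    """Convert FLOPs to dollars given an assumed *sustained* per-GPU throughput and hourly price."""
    gpu_hours = flops / flops_per_gpu_second / 3600.0
    return gpu_hours * usd_per_gpu_hour


if __name__ == "__main__":
    n = 1.3e9                     # hypothetical 1.3B-parameter model
    d = chinchilla_tokens(n)      # 2.6e10 tokens
    c = training_flops(n, d)      # ~2.0e20 FLOPs
    # Assumed placeholders: 100 TFLOP/s sustained per GPU and $2 per GPU-hour.
    cost = training_cost_usd(c, 100e12, 2.0)
    print(f"{d:.3g} tokens, {c:.3g} FLOPs, ~${cost:,.0f}")
```

Because cost scales with N x D, and the compute-optimal D itself scales with N, doubling model size roughly quadruples training compute under these rules.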

Deployment and Inference — Ongoing inference costs have not dropped as sharply as training costs, and most of the compute cost will end up in inference, not training. Inference costs therefore create an even more significant constraint on builders, because they limit the types of business models a company can choose. Deployment frameworks such as Apache TVM and techniques such as distillation and quantization can all help, but they require considerable technical depth to use. OctoML (from the creators of TVM) offers managed services to reduce cost and deployment time and maximize utilization across a broad set of hardware platforms. That makes these kinds of optimizations available to a larger set of builders and also lets the most technically sophisticated builders work more efficiently. A set of hosted inference companies, such as Modal Labs, Banana, Beam, and Saturn Cloud, also aim to make inference more cost-effective than running directly on a hyperscaler such as AWS, Azure, or GCP.
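Quantization is the easiest of these techniques to show in a few lines. Below is a minimal sketch of symmetric per-tensor int8 quantization in plain Python: each float weight is stored as an 8-bit integer plus one shared scale, which cuts weight memory roughly 4x versus 32-bit floats at the cost of a rounding error of at most half a scale step. Real deployment stacks typically use more sophisticated schemes (per-channel scales, calibration data, quantized kernels), so treat this as an illustration of the idea only.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor int8 quantization: w ~= scale * q, with q in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0  # avoid dividing by zero for all-zero tensors
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from int8 values and the shared scale."""
    return [scale * v for v in q]


if __name__ == "__main__":
    weights = [0.5, -1.27, 0.0, 0.333]
    q, scale = quantize_int8(weights)
    restored = dequantize(q, scale)
    worst = max(abs(w - r) for w, r in zip(weights, restored))
    print(q, f"scale={scale:.4f}", f"max error={worst:.4f}")
```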

#HereWeGo

We’ve only begun to scratch the surface of what is possible with large-scale foundation models, broadly across the stack. Big tech companies and well-capitalized startups are investing heavily in even bigger and better models, tooling, and infrastructure. But the best innovations require fearless technology and product inspiration. We love to meet teams with both of those, but there are too few on this earth. The pace and quality of innovation around foundation models will be constrained until the stack enables teams with a spike on one side or the other to contribute. All of this work will be done by a combination of big tech, founders, academics, developers, open-source communities, and investors. At the same time, all this innovation carries with it the responsibility of considering the ethical impacts of FM use cases, including potential unintended consequences, and putting necessary guardrails in place. This is at least as important as advancing the technology itself.

It’s up to all of us to make the future happen faster in AI-driven applications. And we are excited to see what new ideas entrepreneurs come up with to help unleash the true power of foundation models and enable the widespread innovation and influence everyone expects.

At Madrona, we have already made multiple investments in this space and are committed to helping make the future happen faster. If you are a founder building applications, models, tooling, or something else in the space of foundation models and would like to meet, get in touch at: [email protected] and [email protected]