Today we are announcing our investment in Fauna.

We believe that the next generation of applications will be serverless. Those applications can be completely new, “greenfield” applications – like a dynamic Jamstack web application, or they can be new functionality that is added to an existing application or service but leverages a rich mobile or web client with a serverless back end. We think of this model of development as the “Client-Serverless” model, and this represents the 4th generation of application architectures.

Fauna helps developers to simplify code, reduce (development and operational) costs and ship faster by replacing their data infrastructure with a single serverless API that is easy to use, maintenance-free, yet full-featured. Fauna is the data API for Client-Serverless applications.

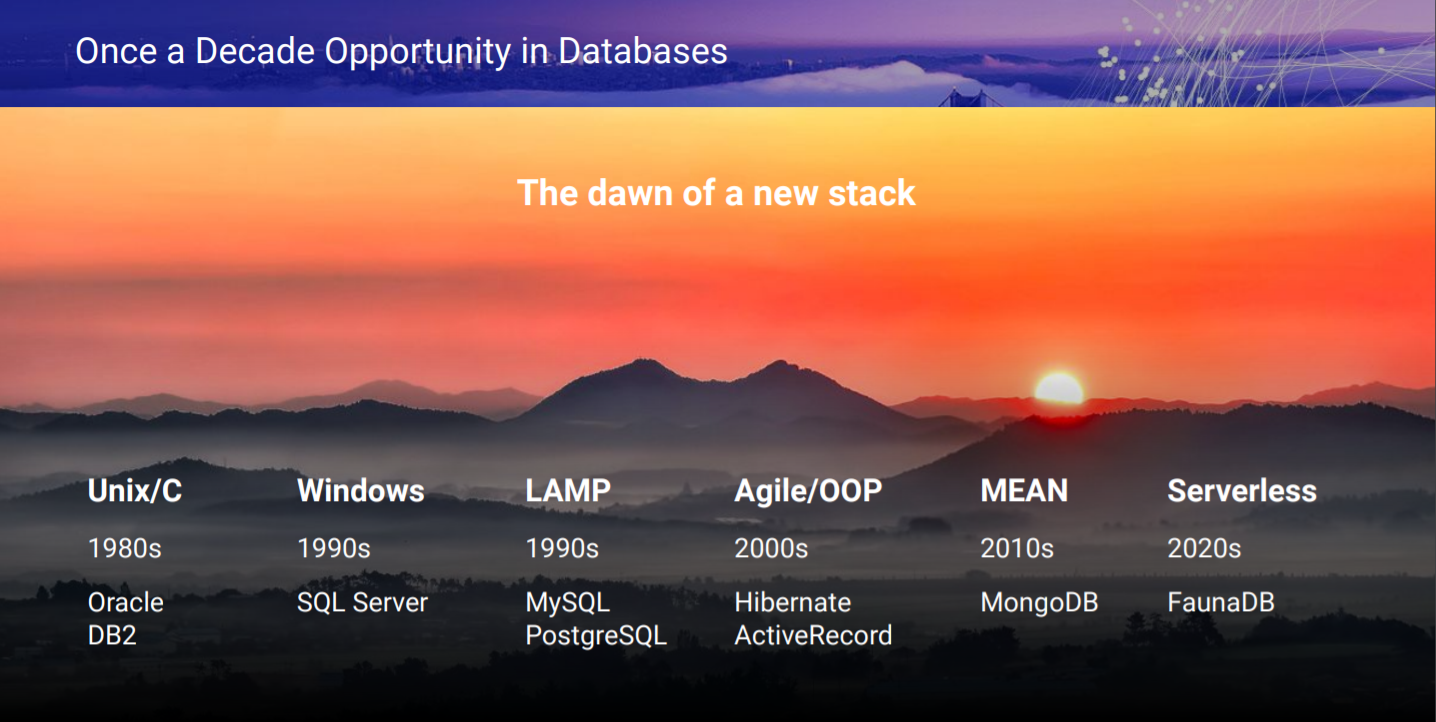

As computing platforms evolve, new opportunities for developer and application platform products are created. Some patterns (like the client/server era) were brought about by new technologies (Windows, SQL Server and the Windows hardware ecosystem) while others were as existing technologies aligned against important use cases (such as the LAMP stack for web applications) and just became “the best way to do it”. At any layer of the platform stack, if you can align with (or better yet create) one of these rising tides it can help you scale more efficiently. Changes in infrastructure also create opportunity, such as what we’ve seen in the Data Warehouse market where Snowflake’s forward-looking bet on exploiting elasticity and scale of cloud infrastructure have enabled them to disrupt a large and existing market.

The database market is massive and there are always opportunities for new platforms to emerge and differentiate. However, this has proven difficult as it is expensive to build a new database and even more expensive to sell one. Nowadays, decisions around infrastructure are more and more driven by developers and so any new platform needs to win the hearts and minds of developers first and foremost. Without this, the only way to land new customers is going to be through a deep technical sales process. You can argue that most of the NoSQL era (Mongo for example) came about via more effectively targeting developers – via Open Source and having more approachable platforms that were on trend for where applications were headed.

So, it is somewhat of a straightforward formula for success in the database market: build a database and latch on to the most important trends and developer technologies. This is what Fauna has done and why they are so well positioned.

Developers are moving en masse to serverless architectures for new applications, marking the dawn of serverless as the next tool chain for building global, hyperscale apps. FaunaDB plugs in seamlessly into this new ecosystem and uniquely extends the serverless experience all the way to the database. This developer journey began with the move to the cloud, but Fauna has correctly identified serverless as the next frontier for cloud and has succeeded in building the database of choice for this new era.

FaunaDB is unique in the market combining the following attributes into a single data API:

- Focus on developer productivity: Web-native API with GraphQL, custom business logic and integration with serverless ecosystem for any framework

- Modern, no-compromise programmable platform: Underlying globally distributed storage and compute engine that is fast, consistent and reliable, with a modern security infrastructure

- No database operations: Total freedom from database operations at any scale

Consequently, Fauna has seen its developer community grow quickly to over 25,000 users over the past year and has developed one of the strongest brands within the serverless and Jamstack ecosystem.

While it is great to identify a massive opportunity with a differentiated product, the most important part of investing in a company is the team.

The two co-founders, Evan Weaver and Matt Freels are amazing engineering and product leaders who were instrumental in building a scalable, distributed system at Twitter, where they witnessed the signs of where the world was moving and went on to build Fauna to fulfill their vision. They built Fauna as a 100% remote team from day 1 with the right focus of communication and collaboration to enable a high performing team aligned on a common vision. With a lot of the tech industry talking about working remotely currently, Evan and Matt have been leaders in adopting the “future of work” and setting up a strong culture for success as the team continues to scale in the new post COVID-19 era.

Eric Berg, who recently joined Fauna as the CEO is somebody that I have worked with at Microsoft in the past, was a key leader at one of Madrona’s portfolio companies (Apptio) and most recently the Chief Product Officer at Okta. During his eight-year Okta experience, he took a pre-Series A company through IPO, developing an identity product no one was sure they needed into a huge success.

And of course, I am very excited to have the opportunity to work together with Bob Muglia again as the Chairman of the Board at Fauna. I have had the opportunity to work together with Bob over the decades, initially at Microsoft and more recently at Snowflake and am thrilled to be able to work with him again here. Bob and I share a common vision of Client-Serverless being the next generation application model – applications are composed of Internet connected services using standard REST and GraphQL APIs, the Jamstack and the browser being the universal client and a globally distributed database as a cornerstone of this ecosystem.

With such a stellar team, a great product and a massive potential opportunity, it is a no-brainer for me to want to be a part of this journey and that’s one of the main reasons we decided to invest in Fauna. Looking forward to this journey!