Enterprise infrastructure has been one of the foundational investment themes here at Madrona since the inception of the firm. From Isilon to Qumulo, Igneous and Tier 3, and more recently Heptio, Snowflake and Datacoral, we have been fortunate to partner with world-class founders who have reinvented and redefined enterprise infrastructure.

For the past several years, with enterprises rapidly adopting cloud and open source software, we have primarily focused on cloud-native technologies and developer-focused services that have enabled the move to cloud. We invested in categories like containerization, orchestration, and CI/CD that have now considerably matured. Looking ahead, with cloud adoption entering the middle innings but with technologies such as Machine Learning truly coming into play and cloud native innovation continuing at a dizzying pace, we believe that enterprise infrastructure is going to get reinvented yet again. Infrastructure, as we know it today, will look very different in the next decade. It will become much more application-centric, abstracted – maybe even fully automated – with specialized hardware often available to address the needs of next-generation applications.

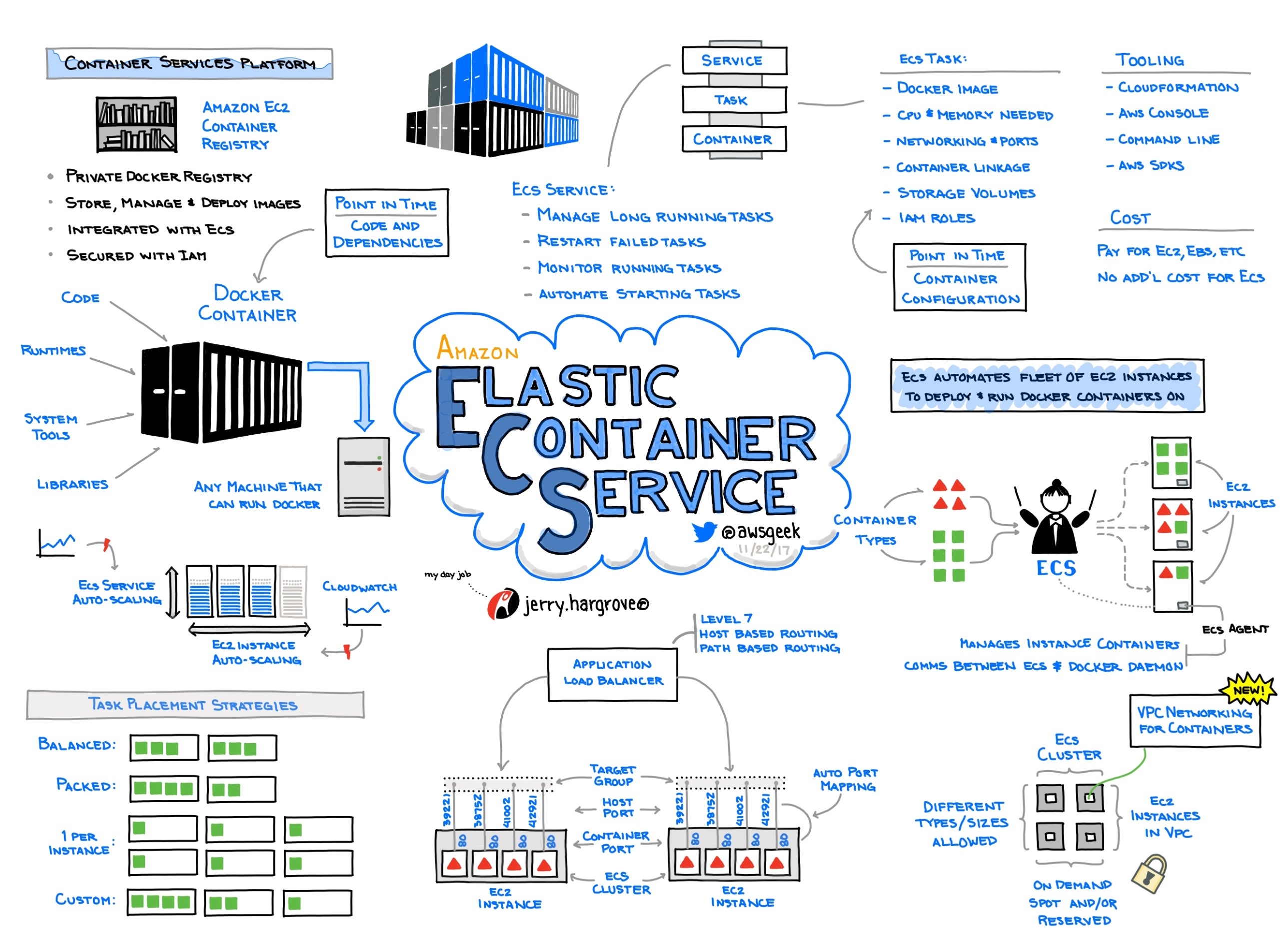

As we wrote in our recent post describing Madrona’s overall investment themes for 2019, this continued evolution of next-generation cloud infrastructure remains the foundational layer of the innovation stack against which we primarily invest. In this piece, we go deeper into the categories in which we expect to spend the most time, energy and dollars over the next several years. While these categories are arranged primarily from a technology trend standpoint (as illustrated in the graphic above), they also align with where we anticipate the greatest customer needs for cost, performance, agility, simplification, usability, and enterprise-ready features.

Management of cloud-native applications across hybrid infrastructure

2018 was undeniably the year of “hybrid cloud.” AWS announced Outposts, Google released GKE On-Prem and Microsoft beefed up Azure Stack (first announced in late 2017). The top cloud providers officially recognized that not every workload will move to the cloud and that the cloud will need to go to those workloads. However, while not all computing will move to public clouds, we firmly believe that all computing will eventually follow a cloud model, offering automation, portability and reliability at scale across public clouds, on-prem and every hybrid variation in between.

In this “hybrid cloud forever” world, businesses want more than just the ability to move workloads between environments. They want consistent experiences so that they can develop their applications once and run them anywhere with complete visibility, security and reliability, using a single playbook for all environments.

This leads to opportunities in the following areas:

- Monitoring and observability: As more and more cloud-native applications are deployed in hybrid environments, enterprises will demand complete monitoring and observability to know exactly how their applications are running. The key will be to offer a “single pane of glass” (complete with management) across multiple clouds and hybrid environments, thereby building a moat against the “consoles” offered by each public cloud provider. More importantly, the next-generation monitoring tools will need to be intelligent in applying Machine Learning to monitor and detect – potentially even remediate – error conditions for applications running across complex, distributed and diverse infrastructures.

- SRE for the masses: According to Joe Beda, the co-founder of Heptio, “DevOps is a cultural shift whereby developers are aware of how their applications are run in a production environment and the operations folks are aware and empowered to know how the application works so that they can actively play a part in making the application more reliable.” The “operations” side of the equation is best exemplified by Google’s highly trained (and compensated) Site Reliability Engineers (SREs). As cloud adoption further matures, we believe that other enterprises will begin to embrace the SRE model but will be unable to attract or retain Google SRE-level talent. Thus, there will be a need for tools that simplify and automate this role and help enterprise IT teams become Google-like operators with the performance, scalability and availability demanded by enterprise applications.

- Security, compliance and policy management: Cloud, where enterprises no longer have total control over the underlying infrastructure, places unique security demands on cloud-native applications. Security ceases to be an afterthought – it now must be designed into applications from the beginning, and applications must be operated with the security posture front and center. This has created a new category of cloud-native security companies that continues to grow. Current examples include our portfolio company Tigera, which has become the leader in network security for Kubernetes environments, and container security companies like Aqua, StackRox and Twistlock. In addition, data management and compliance – not just for data at rest but also for data in motion between distributed services and infrastructures – create a major pain point for CIOs and CSOs. Integris addresses the significant associated privacy considerations, partly fueled by GDPR and its clones. The holy grail is to analyze data without compromising privacy. Technologies such as secure enclaves and blockchains are also enabling interesting opportunities in this space and we expect to see more.

- Microservices management and service mesh: With applications increasingly becoming distributed, open source projects such as Istio (Google) and Envoy (Lyft) have emerged to help address the great need to efficiently connect and discover microservices. While Envoy has seen relatively wide adoption, it has acted predominantly as an enabler for other services and businesses such as monitoring and security. With next-generation applications expected to leverage best-in-class services, regardless of which cloud, on-prem or hybrid infrastructure they run on, we see an opportunity to provide a uniform way to connect, secure, manage and discover microservices running in a hybrid environment.

- Stream processing: Customers are awash in data and events from across these hybrid environments, including data from server logs, network wire data, sensors and IoT devices. Modern applications need to be able to handle the breadth and volume of data efficiently while delivering new real-time capabilities. Stream processing is one of the most important areas of the application stack, enabling developers to unlock the value in these sources of data in real time. We see fragmentation in the market across various approaches (Flink, Spark, Storm, Heron, etc.) and an opportunity for convergence. We will continue to watch this area to understand whether a differentiated company could be created.
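To make the monitoring bullet above more concrete, here is a minimal sketch of what "detecting error conditions" can look like at its very simplest: a rolling statistical baseline over a single metric. This is an illustration only, not any vendor's implementation. The class name `RollingAnomalyDetector`, the helper `find_anomalies`, and the window and threshold defaults are all hypothetical, and a production system would use far richer models and many correlated signals.

```python
from collections import deque
from statistics import mean, stdev


class RollingAnomalyDetector:
    """Flags metric samples that deviate sharply from a recent baseline.

    Hypothetical illustration: a z-score test over a sliding window.
    """

    def __init__(self, window=30, threshold=3.0, min_samples=5):
        self.samples = deque(maxlen=window)  # recent "normal" values
        self.threshold = threshold           # allowed deviations from the mean
        self.min_samples = min_samples       # warm-up before judging anything

    def observe(self, value):
        """Return True if `value` looks anomalous relative to the window."""
        anomalous = False
        if len(self.samples) >= self.min_samples:
            mu = mean(self.samples)
            sigma = stdev(self.samples)
            # A perfectly flat baseline (sigma == 0) is never flagged here.
            if sigma > 0 and abs(value - mu) / sigma > self.threshold:
                anomalous = True
        # Only fold normal samples into the baseline, so an ongoing
        # incident is not absorbed as the "new normal".
        if not anomalous:
            self.samples.append(value)
        return anomalous


def find_anomalies(latencies_ms, **kwargs):
    """Return the indices of samples the detector flags."""
    detector = RollingAnomalyDetector(**kwargs)
    return [i for i, v in enumerate(latencies_ms) if detector.observe(v)]
```

Feeding it a latency series with one obvious spike, for example `find_anomalies([100, 102, 98, 101, 99, 100, 103, 97, 500, 101])`, flags only the spike. The harder problems the bullet points at, such as correlating signals across clouds and deciding when automatic remediation is safe, sit well beyond a sketch like this.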

Abstraction and automation of infrastructure

While containerization and all of the other CNCF projects promised simplification of dev and ops, the reality has turned out to be quite different. In order to develop, deploy and manage a distributed application today, both dev and ops teams need to be experts in a myriad of different tools, from version control, orchestration systems, CI/CD tools and databases to monitoring and security. The increasingly crowded CNCF roadmap is a good reflection of that growing complexity. CNCF’s flagship conference, KubeCon, was hosted in Seattle in December and illustrated both the interest in cloud-native technologies (attendance has grown 8x since 2016 to over 8,000) and the need for increased usability, scalability, and help moving from experimentation to production. As a result, in the next few years, we anticipate that an opposite trend will take effect. We expect infrastructure to become far more “abstracted,” allowing developers to focus on code and letting the “machine” take care of all the nitty-gritty of running infrastructure at scale. Specifically, we think opportunities are becoming available in the following areas:

- Serverless becomes mainstream: For way too long, applications (and thereby developers) have remained captive to the legacy infrastructure stack, in which applications were designed to conform to the infrastructure and not the other way around. Serverless, first introduced by AWS Lambda, broke that mold. It allowed developers to run applications without having to worry about infrastructure and to combine their own code with best-in-class services from others. While this has created a different concern for enterprises – applications architected to use Lambda can be difficult to port elsewhere – the benefits of serverless, in particular rapid product experimentation and cost, will compel a significant portion of cloud workloads to adopt it. We firmly believe that we are at the very beginning of serverless adoption and we expect to see a lot more opportunities in this space to further facilitate serverless apps across infrastructure, similar to Serverless.com (toolkit for building serverless apps on any platform) and IOpipe (monitoring for serverless apps).

- Infrastructure backend as code: The complexity of building distributed applications often far exceeds the complexity of the app’s core design and wastes valuable development time and budget. For every app a developer wants to build, they end up writing the same low-level distributed systems code again and again. We believe that will change and that the distributed systems backend will be automatically created and optimized for each app. Companies like Pulumi and projects like Dark are already working to address this need.

- Fully autonomous infrastructure: Automating management of systems has been the holy grail since the advent of enterprise computing. However, with the availability of “infinite” compute (in the cloud), telemetry data, and mature ML/AI technology, we anticipate significant progress towards the vision of fully autonomous infrastructure. Even in the case of cloud services, many complex configuration and management choices need to be made to optimize the performance and costs of several infrastructure categories. These choices range from capacity management in a broad range of workloads to more complex decisions in specific workloads such as databases. In databases, for example, there has been some very promising research on applying machine learning to everything from basic configuration to index maintenance. We believe there are exciting capabilities to be built and potentially new companies to be grown in this area.
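To illustrate the serverless bullet above, here is a minimal sketch of a function in the style AWS Lambda uses for Python: the developer supplies a single handler, and the platform takes care of servers, scaling and routing. The `handler(event, context)` entry-point shape is Lambda's convention. The event and response fields below assume an HTTP-style trigger, and the greeting logic and `name` parameter are purely illustrative.

```python
import json


def handler(event, context):
    """Entry point the platform invokes once per request.

    Assumes an HTTP-style event carrying optional query parameters;
    `context` (runtime metadata) is unused in this sketch.
    """
    # The query-parameter field may be missing or None when none are sent.
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }
```

Nothing in the function mentions machines, ports or capacity, which is the appeal. The reliance on a provider-specific event shape is also a small example of the portability concern raised in the same bullet.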

Specialized infrastructure

Finally, we believe that specialized infrastructure will make a comeback to keep up with the demands of next-generation application workloads. We expect to see that in both hardware and software.

- Specialized hardware: While ML workloads continue to proliferate and general-purpose CPUs (and even GPUs) struggle to keep up, new specialized hardware has arrived in the cloud, from Google’s TPUs to Amazon’s new Inferentia chips. Microsoft Azure also now offers FPGA-based acceleration for ML workloads while AWS offers FPGA accelerators that other companies can build upon – a notable example being the FPGA-based genomics acceleration built by Edico Genome. While we are unlikely to invest in a pure hardware company, we do believe that the availability of specialized hardware in the cloud will enable a variety of new investable applications involving rich media, medical imaging, genomic information, etc. that were not possible until recently.

- Hardware-optimized software: With ML coming to every edge device – sensors, cameras, cars, robots, etc. – we believe that there is an enormous opportunity to optimize and run models on hardware endpoints with constrained compute, power and/or bandwidth. Xnor.ai, for example, optimizes ML models to run on resource-constrained edge devices. More broadly, we envision opportunities for software-defined hardware and open source hardware designs (such as RISC-V) that enable hardware to be rapidly configured specifically for various applications.
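As a rough illustration of what optimizing a model for constrained hardware can involve, the sketch below shows one widely used technique: quantizing floating-point weights to 8-bit integers with a single shared scale. It is a generic example and not a description of Xnor.ai's approach or of any particular product. The function names `quantize_int8` and `dequantize` are hypothetical, and the sample weights are made up.

```python
def quantize_int8(weights):
    """Map float weights to integers in [-127, 127] with one shared scale.

    Symmetric quantization: the largest magnitude maps to +/-127.
    """
    max_abs = max((abs(w) for w in weights), default=0.0)
    # Avoid dividing by zero when every weight is 0 (or the list is empty).
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [round(w / scale) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the integer representation."""
    return [q * scale for q in quantized]


# Made-up weights, purely for illustration.
weights = [0.5, -1.0, 0.25, 0.0]
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
```

Each weight now fits in one byte instead of four, at the cost of a rounding error of at most half the scale per weight. Trading a small amount of accuracy for large savings in memory, power and bandwidth is the kind of bargain edge deployments tend to require.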

Open Source Everywhere

For every trend in enterprise infrastructure, we believe that open source will continue to be the predominant delivery and license mechanism. The associated business model will most likely include a proprietary enterprise product built around an open core, or a hosted service where the provider runs the open source as a service and charges for usage.

Our own yardstick for investing in open source-based companies remains the same. We look for companies based around projects that can make a single developer look like a “hero” by making her/him successful at some important task. We expect the developer mindshare for a given open source project to be reflected in metrics such as GitHub stars, growth in monthly downloads, etc. A successful business can then be created around that open source project to provide the capabilities that a team of developers, and eventually an enterprise, would need and pay for.

Conclusion

These categories are the “blueprints” we have in our minds as we look for the next billion-dollar business in the enterprise infrastructure category. Those blueprints, however, are by no means exhaustive. The best founders always surprise us with their ability to look ahead and predict where the world is going before anyone else does. So, while this post describes some of the infrastructure themes we are interested in at Madrona, we are not exclusively thesis-driven. We are primarily founder-driven, but we also believe that having a thoughtful point of view about the trends driving the industry – while being humble, curious and open-minded about opportunities we have not thought as deeply about – will enable us to partner with and help the next generation of successful entrepreneurs. So, if you have further thoughts on these themes, or especially if you are thinking about building a new company in any of these areas, please reach out to us!

Current or previous Madrona Venture Group portfolio companies mentioned in this blog post: Datacoral, Heptio, Igneous, Integris, IOpipe, Isilon, Pulumi, Qumulo, Snowflake, Tier 3, Tigera and Xnor.ai