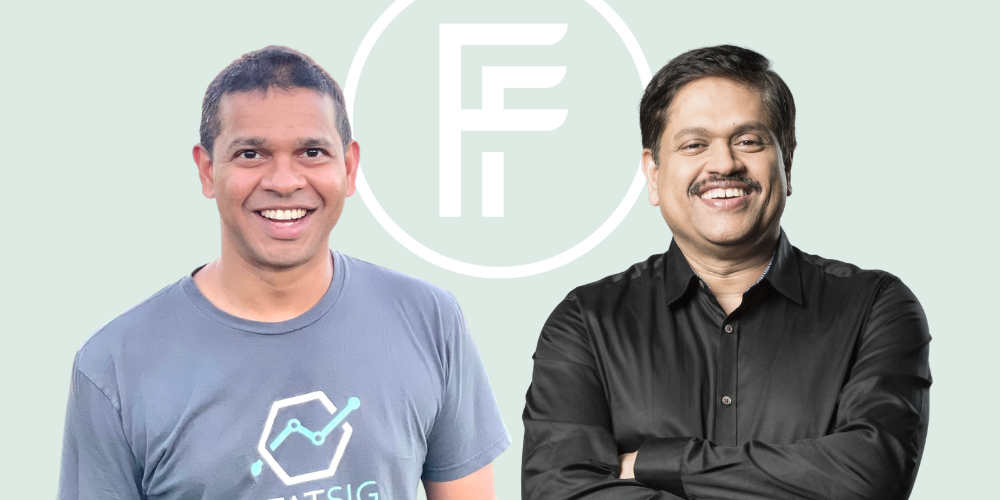

Today Madrona investor Palak Goel has the pleasure of chatting with Glean founder and CEO Arvind Jain. Glean provides an AI-powered work assistant that breaks down traditional data silos, making info from across a company more accessible. It should be no surprise that Glean is touted as the search engine for the enterprise because Arvind spent over a decade at Google as a distinguished engineer where he led teams in Google search. Glean has raised about $155 million since launching in 2019 and was named a 2023 IA40 winner. Palak and Arvind talk about Glean’s journey and the transformative power of enterprise AI on workflows, the challenges of building AI products, how AI should not be thought of as a product but rather as a building block to create a great product, the need for better AI models and tooling, and advice for founders in the AI space, including the importance of customer collaboration in product development, the need for entrepreneurs to be persistent – and so much more!

This transcript was automatically generated and edited for clarity.

Palak: Arvind, thank you so much for coming on and taking the time to talk about Glean.

Arvind: Thank you for having me. Excited to be here.

Palak: To kick things off, in 2023, VCs have invested over a billion dollars into generative AI companies, but I can probably count on one hand how many AI products have actually made it into production. As someone who’s been building these kinds of products for decades, why do you think that is and what should builders be doing better?

Arvind: People are doing a great job building products. I’ve seen a lot of really good ideas out there leveraging the power of large language models. Still, it takes time to build an enterprise-grade product that can be reliably used to solve specific business problems. When AI technology allowed people to create fantastic demos that appeared to solve problems amazingly well, expectations in the market went up very quickly: here’s this magical technology, and it will solve all of our problems. That was one of the reasons we went through a phase of disappointment later on, because it turns out that AI technology, while super powerful, is extremely hard to make work in your business.

One of the big things for enterprise AI to work is that you connect your enterprise knowledge and data with the power of large language models. It is hard, clunky, and takes effort, so I think we need more time. It’s great to see this investment in this space because you’re going to see fundamentally new types of products that are going to get built and which are going to add significant value to the enterprise. I expect we will see a lot of success in 2024.

Palak: What are some of those products or needs that you feel are extremely compelling or products you expect to see? I’m curious about the Glean platform. From that perspective, how are you enabling some of those applications to be built?

Arvind: If you think about enterprise AI applications, a common theme across all of them is that you connect your enterprise data and knowledge with the power of LLMs. Given a task that you’re trying to solve, you have to assemble the right pieces of information that live within your company and provide it to the LLMs so that the LLM can then do some work on it and solve the problem in the form of either providing you with an answer or creating some artifact that you’re looking for.
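The pattern Arvind describes here, assembling the relevant pieces of company information for a task and handing them to the LLM, can be sketched roughly as follows. This is a minimal illustration and not Glean's implementation: the `Document` type, the word-overlap scoring, and the prompt wording are all assumptions made for the example, and the final call to an actual LLM is deliberately left out.

```python
from dataclasses import dataclass


@dataclass
class Document:
    """A hypothetical stand-in for one piece of enterprise knowledge."""
    title: str
    text: str


def tokenize(text: str) -> set[str]:
    """Toy tokenizer: lowercase words with surrounding punctuation removed."""
    words = (word.strip(".,?!").lower() for word in text.split())
    return {word for word in words if word}


def retrieve(question: str, documents: list[Document], top_k: int = 2) -> list[Document]:
    """Rank documents by word overlap with the question (assumed scoring)."""
    question_words = tokenize(question)
    scored = [(len(question_words & tokenize(doc.text)), doc) for doc in documents]
    ranked = sorted(scored, key=lambda pair: pair[0], reverse=True)
    # Keep only documents that share at least one word with the question.
    return [doc for score, doc in ranked if score > 0][:top_k]


def build_prompt(question: str, context: list[Document]) -> str:
    """Assemble the retrieved context and the task into one prompt string."""
    context_block = "\n".join(f"[{doc.title}] {doc.text}" for doc in context)
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context_block}\n\n"
        f"Question: {question}"
    )


if __name__ == "__main__":
    docs = [
        Document("Expense policy", "Employees submit expense reports within 30 days of travel."),
        Document("Onboarding guide", "New hires receive a laptop on their first day."),
    ]
    question = "When do I submit expense reports?"
    # The resulting prompt would then be sent to whichever LLM you use.
    print(build_prompt(question, retrieve(question, docs)))
```

A production system would replace the word-overlap scoring with a real search index and would also enforce document permissions, which this sketch ignores.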

From a Glean perspective, that’s the value that we are adding. We make it easy to connect your enterprise data with the power of large language model technology. We want to take on all the work of building data ETL pipelines, figuring out how to keep this data fresh and up to date, and setting up a retrieval system where you can put that data so that you can retrieve it at the time a user wants to do something.

We want to remove all of that technical complexity of building an AI application from you and instead give you a no-code platform that you can use and focus more on your business or application logic and not worry about how to build a search engine or a data ETL pipeline. We will enable developers inside the enterprise to build amazing AI applications on top of our platform.

The use cases I always hear from our enterprises start with developers. You already know how the code copilots add a lot of value. Statistics show that about 30% of all the code is written by copilots. You’re starting to see good productivity wins from AI for software development. You can use these models for test-case generation, and there are a lot of opportunities. But we’re still only improving that 10% of the time that developers spend writing code.

Developers are not spending most of their time writing code. Most of the time, they’re figuring out how to solve a problem or designing the solution. So far, the scope of AI has mostly been that 10 or 20% of a developer’s time, bringing some efficiencies there.

Next year, we will see many more sophisticated tools to increase productivity in that entire developer lifecycle. Similarly, for support and customer care teams, AI is starting to play a significant role in speeding up customer ticket resolution and answering tickets that customers have. These are some of the big areas in which we see a lot of traction today.

Palak: As developers move from prototyping to production, do you think it’s a lack of sophistication in the tooling around some of these models or is it the models themselves that need to get better?

Arvind: It’s both. AI models are smart, but everybody has seen how they can hallucinate. They can make things up because, fundamentally, they are probabilistic models. Across all the model companies, whether they work on open-domain or closed-domain models, incredibly fast progress is happening to make these models better, more predictable, and more accurate. At the same time, a lot of work is happening on the tooling side. For example, if I’m building an AI application, how do I ensure it’s doing a good job? When we started building an application earlier this year, all the evaluation of that system was manual. Our developers were spending the majority of their time trying to evaluate whether the system was doing well because there was no way to automate that process. Now, you’re seeing a lot of development in AI evaluation systems.

Similarly, there is infrastructure around models: making sure you’re not putting sensitive information into the models or taking sensitive output and showing it back to the user. That privacy and security filtering, that plumbing layer, is getting built in the industry right now.

There’s a lot of work on post-processing of AI responses because the models are unpredictable: how can you take the output of the models and then apply techniques to detect when something like a hallucination has happened? If the model hallucinates, then you suppress those responses. The entire toolkit is undergoing a lot of development. You can’t build a product in three or six months and expect it to solve real enterprise problems, which was an expectation in the market. You have to spend time on it. Our product has worked because we have been on this journey for the last five years. We didn’t start building it in November of 2022 after ChatGPT and suddenly expect it to work for our enterprises. This technology takes time to build.
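The post-processing step mentioned above, checking a model's output and suppressing it when it looks made up, might be sketched like this. It is a deliberately crude heuristic under stated assumptions, not a description of how Glean or any particular product does it: the word-overlap `grounding_score`, the `0.6` threshold, the stop-word list, and the fallback message are all hypothetical choices for illustration.

```python
STOP_WORDS = {"a", "an", "the", "is", "are", "of", "to", "in", "on", "and", "for"}

FALLBACK = "I could not find a reliable answer in the available documents."


def content_words(text: str) -> set[str]:
    """Lowercase words with punctuation stripped and stop words removed."""
    words = (word.strip(".,?!").lower() for word in text.split())
    return {word for word in words if word and word not in STOP_WORDS}


def grounding_score(answer: str, sources: list[str]) -> float:
    """Fraction of the answer's content words that also appear in the sources."""
    answer_words = content_words(answer)
    if not answer_words:
        return 0.0
    source_words = set().union(*(content_words(source) for source in sources))
    return len(answer_words & source_words) / len(answer_words)


def postprocess(answer: str, sources: list[str], threshold: float = 0.6) -> str:
    """Return the answer if it looks grounded in the sources, else a fallback."""
    if grounding_score(answer, sources) >= threshold:
        return answer
    return FALLBACK


if __name__ == "__main__":
    sources = ["Employees submit expense reports within 30 days of travel."]
    print(postprocess("Expense reports are due within 30 days of travel.", sources))
    print(postprocess("Expense reports are approved automatically by the finance robot.", sources))
```

Word overlap misses paraphrases and can be fooled by an answer that reuses source vocabulary to say something false, so it only illustrates where such a check sits in the pipeline, after generation and before the response reaches the user.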

Palak: As somebody who has gone on that journey from prototype to production, what advice do you have for founders that are starting similar journeys and are looking to be part of these conversations with big enterprise customers?

Arvind: A generic piece of advice for folks doing this for the first time is that building a product is always hard. There are a lot of times when you’ll feel that, oh, maybe this is a bad idea, and I should not pursue it, or it’s too difficult, or there’s a lot of other people doing it, and they may do it better than me. I constantly remind people that a startup journey is hard, and you’ll keep having these thoughts and just have to persist. The thing that you have to remember is that it’s hard for anybody to go and build a great product. It takes a lot of effort and time, and you also have that time. If you persist, you’re going to do a great job. That’s my number one advice to any startup or founder out there.

The second piece of advice concerning AI is if you start to think of AI as your product, then you will fail. We don’t see AI as fundamentally any different from other technologies we use. For example, we use infrastructure technologies from cloud providers, and that’s a big enabler for us. I have built products in the pre-cloud era, and I know how the cloud has fundamentally changed the quality of the products we built and how easy it is to build scalable products.

AI should be no more than one of the building blocks you will use, and you still have to innovate outside of the models. You have to innovate to make workflows better for people, and that takes something beyond, hey, yeah, I can do something better, and therefore, I’m going to build a product.

Palak: Yeah, I think that makes a lot of sense and I love the customer centricity there, really figuring out what their needs are and building a product to best serve those needs rather than taking more of a technology-first approach and taking a hammer and sort of looking for nails.

To keep on this AI trend a little bit more, I think every Fortune 500 CEO this year was asked by their board, what is your AI strategy? And we’ve seen companies spin up AI skunkworks projects and evaluate a lot of early offerings. Naturally, a lot of founders and builders want to be a part of those conversations. I’m curious how you approach that at Glean and if you have more advice for founders looking to be a part of those conversations.

Arvind: In that sense, 2023 has been an excellent year for enterprise AI startups because you have this incredible push that you’re getting from the boardrooms, where CIOs and leaders of these enterprises are motivated to experiment with AI technologies and see what they can do for their businesses. We have found it very helpful because it allows us to bring in our technology. There’s more acceptance and urgency for somebody to try Glean and see what it does for them.

I’ve heard from enterprises that many of these experiments have yet to work out. A lot of POCs have failed, and so my advice to founders is to have an honest conversation with your customers, with the leaders that you’re trying to sell the product to. If you create an amazing demo, which is easy to create, and sell something you don’t have, you lose the opportunity and the credibility. It’s hard to bounce back from it. Even the enterprise leaders understand that, hey, this technology is new, it’s going to take time to mature, and they’re just looking for partners to work with who they feel have integrity and who have the ability to be on this journey with them and build products over time. That’s my advice to folks: be honest and share the vision, share the roadmap, and show some promise right now, and that’s enough. You don’t need to over-promise and under-deliver.

Palak: That will become the conversation in 2024: what’s the ROI on our AI strategy? From the enterprise leader’s perspective, who had mixed results trying out a POC, do they double down and stick with the effort? How do you think about that, and how are you seeing it from the Glean perspective?

Arvind: AI is a wave that you have to ride. For example, when cloud technology was just forming, there was a lot of skepticism about it: “Okay, I’m not trusting my data to a data center that I don’t even have a key or a lock for; I’m not going to do it.” Some companies were early adopters, and some companies adopted it late. Overall, the earlier you adopt these new technologies, the better you do as a company.

The AI technologies are now bigger in terms of the transformative impact they will have on enterprises or the industry as a whole than even the cloud. It’s an undeniable trend, and the technology is powerful. You have to invest in it, and you have to, as an enterprise leader, be willing to experiment. You don’t have to commit to spending a lot of money, but you have to see what’s out there, and put in that effort to embrace and adopt this technology.

Palak: Absolutely. I’d love to double-click on that a little bit more on AI being a bigger opportunity than cloud. I’d love to get a sense of where you think that is and what are some of these amazing experiences beyond our imaginations that you think will result out of this new wave of technology?

Arvind: A decade ago, it was about $350 billion in overall technology spend. It’s probably double that now. The cloud is worth 400 billion, which is more than all the tech spending that used to happen 10 or 15 years ago. AI impacts more than software; it impacts how services are delivered in the industry. For example, think about the legal industry and the kind of services that you get from them; what impact can AI have on those services? How can it make those services better? How can it make it more automated?

If you start to think about the overall scope of it, it feels much larger. It will fundamentally change how we work, and that’s our vision at Glean. The way we look at it today, take any enterprise: you have the executives, and they get the luxury of having a personal assistant who helps do half of their work. I have that luxury, too, where I get to tell somebody to do work for me, and they look at my calendar, they look at my email, and they help me process all of it. I feel like it’s unfair that I have that help but nobody else in our company does. But AI is going to change all of that.

Five years from now, each one of us, regardless of what role we play in the company, how early we are in our career, we’re going to have a really smart personal assistant that’s going to help us do most of our work, most of the work that we do manually today. That’s our vision with Glean, that’s what we’re building with the Glean assistant.

Imagine a person in your company who’s been there from day one and has read every single document that has ever been written inside this company. They’ve been part of every conversation and meeting, and they remember everything, and then they’re ready for you 24/7. Whenever you have a question, they can answer using all of their company knowledge, and that’s the capability that computers have now. Of course, computers are always good at processing large amounts of information, but now they can also understand and synthesize it.

The impact of AI is going to be a lot more than what all of us are envisioning right now. We tend to overestimate the impact in the next year, but we underestimate the impact that the technology will have in the next 10 or 20 years.

Palak: Just so you know, Arvind, when you were starting Glean, I remember because I was working on the enterprise search product at Microsoft, and I think you were cold messaging people on LinkedIn to try out Glean, and one of the people you happened to cold message was my dad who also went to IIT. And so it was just like a funny story.

Arvind: If I’m trying to solve a problem, I want to talk to the product’s users as much as possible. So, for example, even at Glean, I was the SDR No. 1. I spoke to hundreds and hundreds of people, whoever I could find, whoever had 10 minutes to spare for me, and asked them about, hey, I’m trying to build something like this. I’m trying to actually build a search product for companies. Does it make sense? Is it going to be useful to you?

The reason it’s so powerful to do that exercise yourself, and to keep going even after you hire a couple of people in sales, is that it generates immense conviction in your mission. I was telling you earlier how the journey is often hard, and you start to question yourself on a bad day. But if you have done that research and talked to lots and lots of users, you can always go back to that and remember that, hey, no, I’ve talked to many people. This is the product that they want. This is a problem that needs to be solved. And so that’s what I always find very helpful for me.

Palak: Arvind, you’ve been in the technology industry for a very long time and have been a part of nearly every wave of technical disruption. What have you learned from each of these waves and how have you applied those learnings to AI?

Arvind: With each one of the big technology advances that have happened over the last three decades, we’ve seen how it fundamentally creates opportunities for new companies to come in and bring new products that are better than the products that were built before, when that technology was not available to anybody. And so that’s one thing that I’ve always kept in mind. Whenever a big new technology wave comes, that’s the opportunity for any company. Whether you are starting a new company or are already a startup, you have to figure out how that is going to change things, how that is going to give you opportunities to build much better products than what was possible before, and then go and work on it. My approach always has been to see these big technology advances as opportunities as opposed to thinking of them as being disruptions.

Palak: I’d love to get a sense of your personal journey as you’ve gone through each of those different waves. What are some of the products that you’ve innovated on and what are you so excited about building with Glean?

Arvind: I remember the first wave; I was in my second job, and we were starting to see the impact of the Internet on the tech industry and the business sector. I got to work on building videos on the web. It was incredible to allow people to watch video content directly on their laptops and machines over the internet. We started with videos, and they would be so tiny because there was no bandwidth available on the internet for us to provide a full-screen experience. Regardless, it was still a fundamental change, because there was no concept before that of, hey, I can watch a video when I want.

Then, the next big thing we saw was mobile, with the advent of smartphones and the iPhone, and things fundamentally changed again. At that time, I was working at Google, and it changed our ability, for example, to personalize results, personalize Google search, and personalize maps for our users at a level that was not possible before, because now we knew where they were and what they were doing. Are they traveling, or are they staying in one place? Are they in a restaurant? And you can use that context to make products so much better.

We’re in the middle of this AI trend now, and our product is a search product for companies. The AI technology, especially the large language models, has given us this opportunity to make our product 10 times better. Before, when somebody came and asked Glean a question, we could point them to the most relevant documents inside the company that they could go and read to get the answer to whatever questions they had. But now we can use the LLM technology to take those documents, understand them using the power of LLMs, and quickly provide AI-generated answers to our users so they can save a lot more time.

It’s been really exciting to have that opportunity to use these big technology advances and quickly incorporate them in our products.

Palak: Yeah, I think that’s one thing that’s always really impressed me about Glean. As far back as 2019 or even before ChatGPT, Glean was probably everybody’s favorite AI product. I’m curious, how has Glean’s technical roadmap evolved alongside this rapid change of innovation over the last 12 months?

Arvind: We started in 2019, and in fact, we were using large language models in our product in 2019. The large language model technology got invented in some ways at Google, and the purpose of working on this was to make search better. So when we started, we had really good open-domain LLMs that we could use and then retrain on the enterprise corpora of our customers to create a really good semantic search experience. But those models were not good enough for you to put their output in front of the users. That started to change in the last year, when the models finally became so good that you can take their output and put it right in front of the users.

So this has allowed us, first, to completely evolve our product. What used to feel like a really good search engine, something like Google inside your company, has now become a lot more conversational. It’s become an assistant that you can go and talk to and do a lot of things with. You can give it complicated instructions, because we can follow complicated instructions using the power of large language model technology and then solve the problem that the user wants to solve. We can also parse and comprehend knowledge at a level that we never could before, and go beyond answering questions to actually do work for you.

One thing that we realized is that AI technology is so powerful, and there are so many internal business processes that AI can make much more efficient and better, that we as a company are not going to be able to go and solve all the AI problems ourselves. Our job has now become more about how to use all of the company knowledge that we have at our disposal and give tools to our customers so that they can bring AI into every single business workflow and generate efficiencies that have never been seen before.

Palak: I think that’s one of the things that from an outsider perspective, has made Glean such a great company, just how big the vision is and how you’re starting with the customer and working backward. I’m curious how you think about internalizing some of those philosophies within your company and how that sort of evolved and how the product’s evolving to this bigger, broader platform vision that you just alluded to.

Arvind: In an enterprise business, it’s very important for us to spend a lot of time with prospective customers, understanding the different use cases they have and the business problems they’re trying to solve. All businesses are very different in some ways, and a big part of building products that can help a wide range of customers is spending the time at the forefront working with our customers, really understanding their scenarios and their data, and then trying to extract common patterns and common needs and drive our product roadmap based on that.

Our product team spends a lot of time doing this process. Every quarter we would go and look at what are the top things we are hearing from our customers, and then of course, we do have our own vision of where we think the world is headed with all these new AI technologies. And so we combine those two things to actually come up with our quarterly roadmap and then execute on it.

The reason we started working on exposing our underlying technologies as a platform that businesses can go and build applications on, for example, was exactly that. As we talked to all these enterprises, one would tell us, hey, I want to bring efficiency to my order workflow process, and this is how it works. Somebody else would come and tell us, hey, I’m getting a lot of requests for my HR service team, and I want to build a self-serve bot for all the employees in the company whenever they have HR questions. We started to listen to all of them and realized that, oh, we can’t do it all; we have no idea what all the things are that they want to go and solve.

Our job then became that, hey, can we give them the building blocks that can make it easy for them to then take this platform and build that value that they were looking for? Do a little bit of work on top of the product and platform that we provide to solve those specific business use cases.

We started building our AI platform for that reason, because AI is so broadly applicable across so many different businesses, so many different use cases, and it became very clear to us that we need that collaboration from our customers to really get full value.

Palak: Awesome, Arvind. So I have a few rapid fire questions as we wrap up. The first is, aside from your own, what intelligent application are you most excited about and why?

Arvind: GitHub Copilot is one of the applications. I see how our developers are able to use it, and there is a clear signal from them that this is a tool that’s truly improving productivity.

Palak: Beyond AI, what trends, technical or non-technical are you most excited about?

Arvind: I’m really excited about the nature of work and how rapidly it is evolving: distributed work, the ability for people to work from wherever they are, and the technologies that are helping us become more and more effective working from our homes. That’s the thing that I’m really excited about, and we’re going to see a lot more. Hopefully we’re going to see some things with telepresence, which makes working from anywhere the same as working from the office.

Palak: Yeah, that’s a good one. Arvind, thank you so much for taking the time. It was really a pleasure to have you on.

Arvind: Thank you so much for having me.

Coral: Thank you for listening to this week’s IA40 spotlight episode of Founded & Funded. We’d love to have you rate and review us wherever you listen to your podcasts. If you’re interested in learning more about Glean, visit www.Glean.com. If you’re interested in learning more about the IA40, visit www.ia40.com. Thanks again for listening, and tune in in a couple of weeks for our next episode of Founded & Funded.