This is the third in our series of four deep dives into our technology-based investing themes, which we outlined in January.

Computers, and the ways in which we interact with them, have come a long way. Before 1984, the keyboard was the only way most people could navigate a computer. Then Apple (with help from Xerox PARC) came along and introduced the world to the mouse. Suddenly, a whole host of activities, such as free-form graphical drawing, became possible. While this may seem trivial now, at the time it enabled the expansion of industries such as graphic design, publishing, and digital media, and the world hasn’t looked back since.

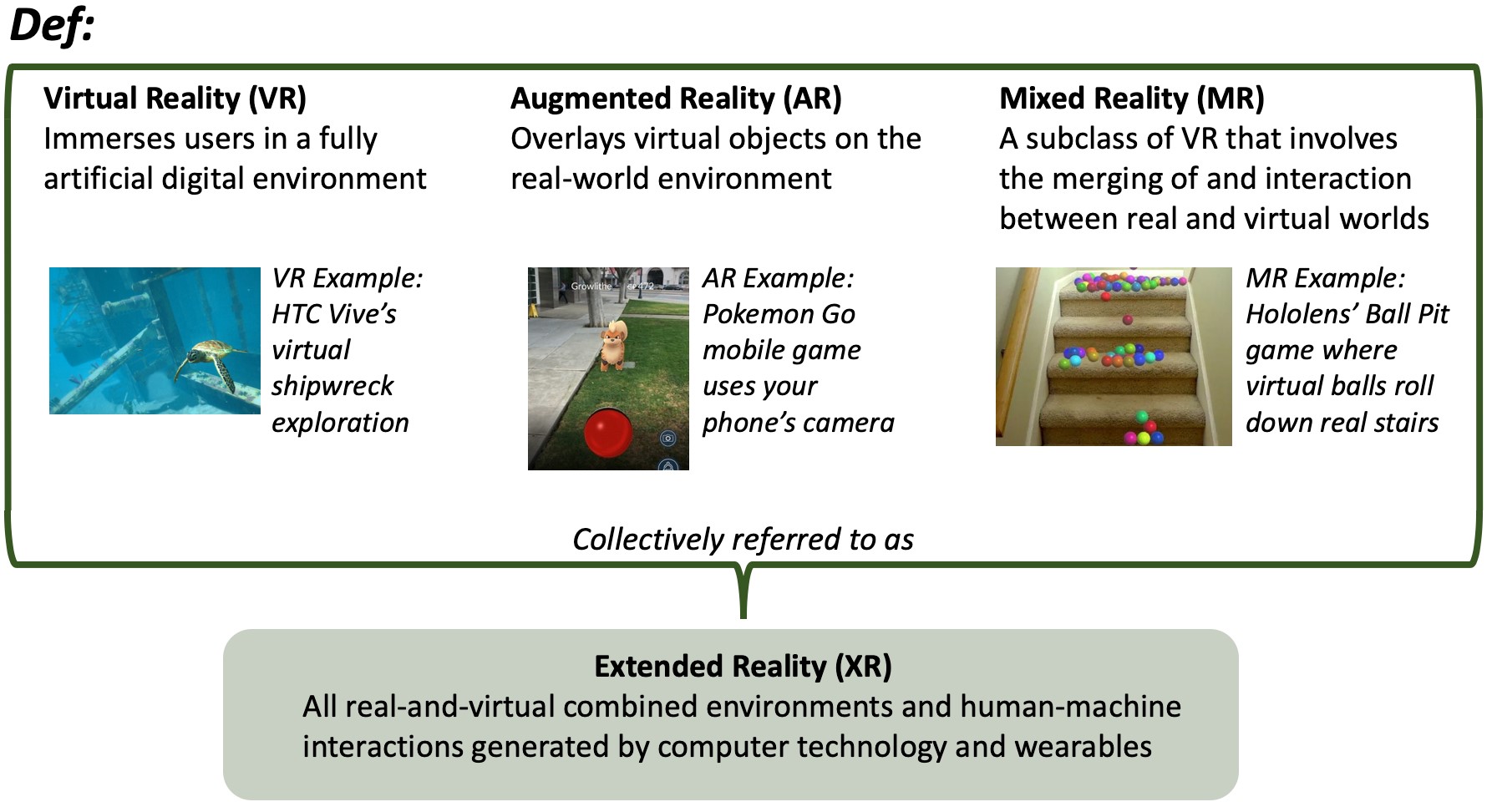

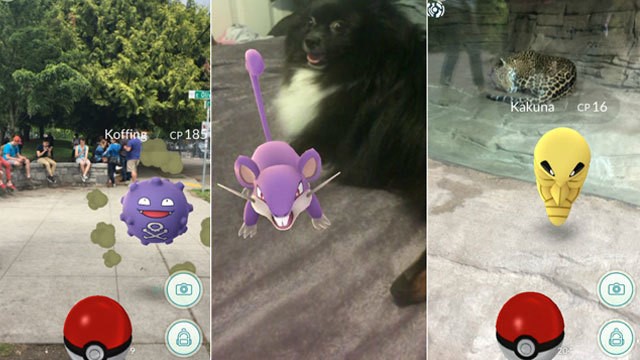

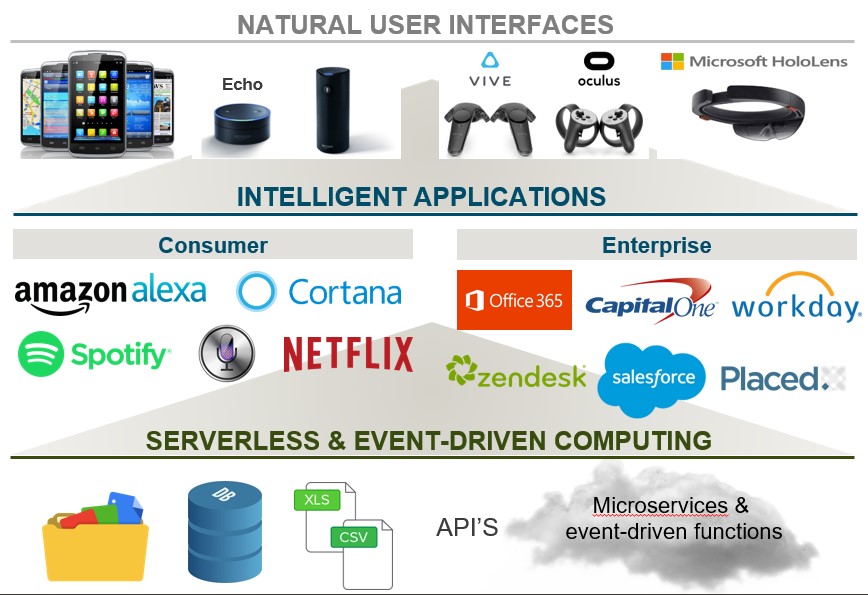

Fast forward to the present: we are seeing an awe-inspiring number of ways that technology is continuing to advance the user interface between humans and computers. Augmented reality, virtual reality, mixed reality (collectively “extended reality,” or XR), voice, touch screens, haptics, gestures, and computer vision, to name a few, are developing and will change the computing experience as we know it. In addition, they will create new industries and business opportunities in ways that we cannot yet imagine. At Madrona we are inspired by what is possible and ready to provide capital and company-building help for the next generation of computer interaction modalities.

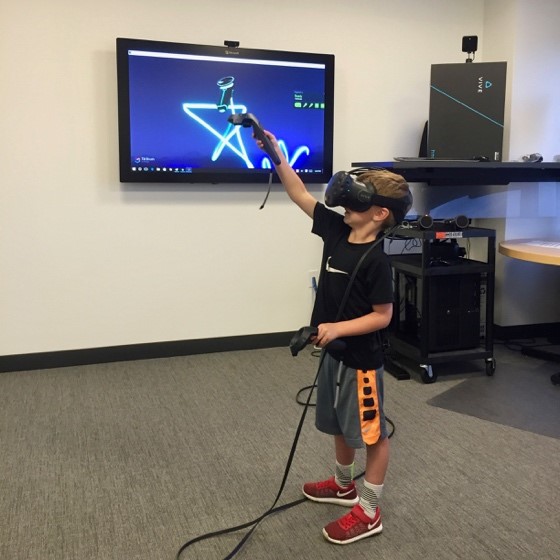

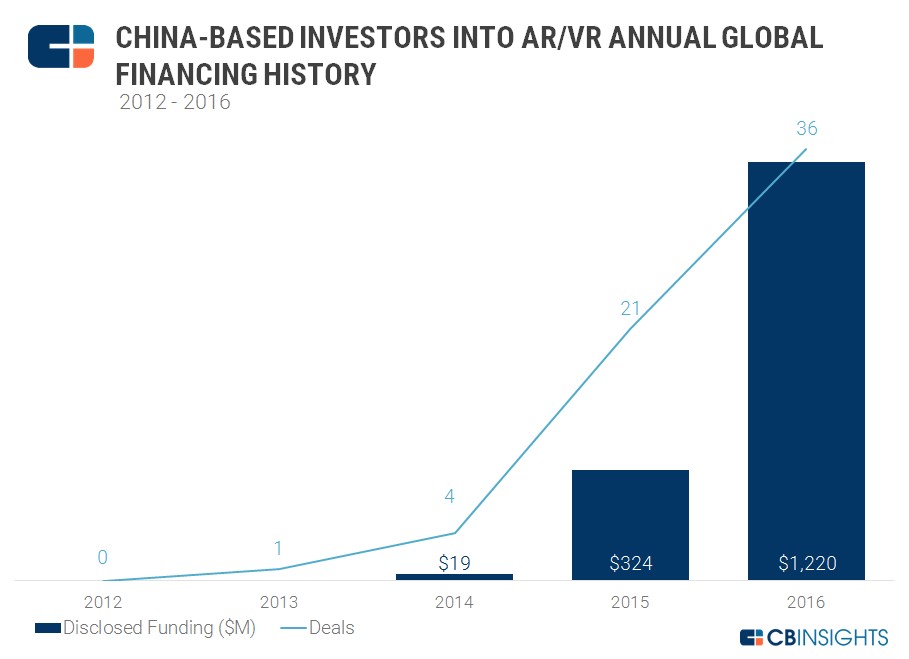

We, along with many others in the industry, were wowed by the magic of VR specifically and dove in early with a couple of key investments in platforms – some worked and some didn’t. We quickly came to realize that VR headset adoption wasn’t going to be as fast as initially predicted, but we remain strong believers in the ability of all types of next-generation user interfaces to change how we experience technology in our lives.

Last month we published our main investment themes, including a summary of our belief in next gen UI. Here is a deep dive into our updated take on the future of both voice and XR technologies.

Voice technology is becoming ubiquitous

Voice tech, more so than any other new UI in recent history, has reached consumers swiftly and relatively easily. While the most common use cases remain fairly basic, voice tech’s affordability (the Amazon Echo Dot sells for $30), cross-operating-system capabilities, and ease of use (all you need to do is speak) have helped drive adoption. The tech giants are pouring billions into turning their voice platforms and assistants, such as Amazon Alexa, Google Assistant, and Apple’s Siri, into sophisticated tools designed to become an integral part of our daily lives. As a result, voice-enabled devices are becoming ubiquitous. Amazon recently reported that 100 million Alexa devices have been sold. Add to that the more than 2 billion Apple iOS devices that come pre-loaded with Siri and the almost 1 billion devices (mostly Android phones) that come pre-loaded with Google Assistant, and it is clear that voice technology as a platform has reached unprecedented scale and market penetration in a very short period of time.

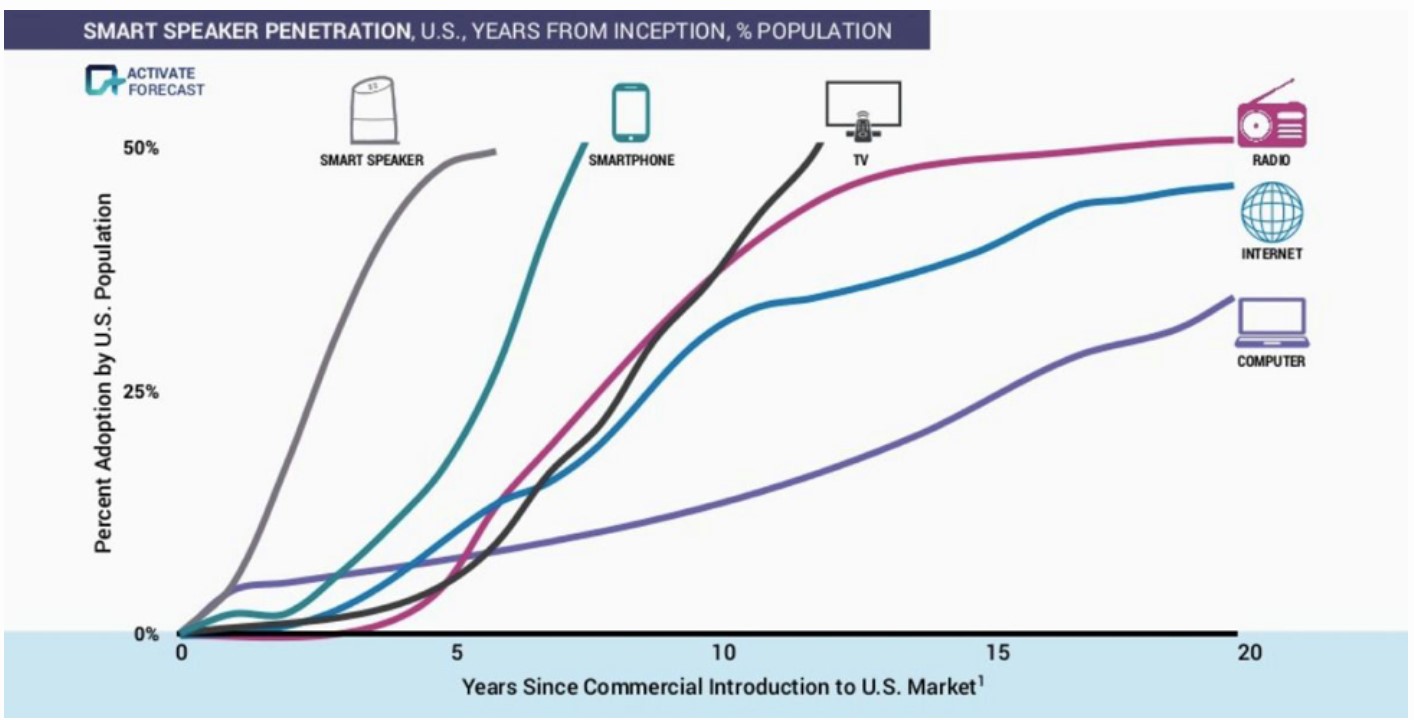

Figure 1: Smart speakers are showing the fastest technology adoption in history. Source: https://xappmedia.com/consumer-adoption-voice/

The key question now: how will voice become a platform for other businesses? For new business models to thrive on voice technology, we think three things have to happen:

(1) Developers need new tools for creating, managing, testing, analyzing, and personalizing the voice experience,

(2) Marketers need new tools for monetization and cross-device and cross-platform brand/content management, and

(3) Businesses need to adapt to a voice-enabled world and enhance their products with voice-enabled intelligence, performance, and productivity.

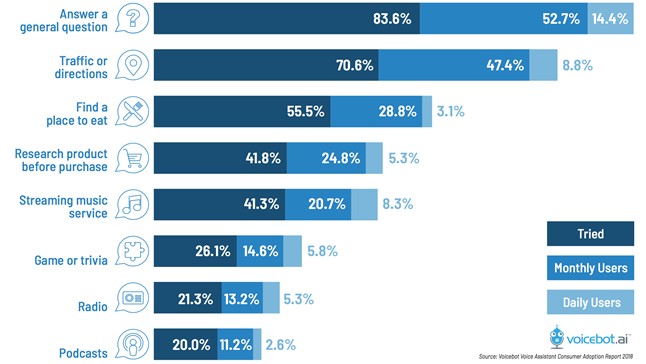

Similar to the early days of web and mobile, the number of voice applications is growing fast, but monetization is nascent and users sometimes struggle with discoverability. For example, Alexa offers over 80,000 “skills,” but if you ask most Alexa owners how they use their device, you may notice that use cases remain fairly basic:

Figure 2: Voice assistants largely used for information & entertainment use cases. Source: https://voicebot.ai/voice-assistant-consumer-adoption-report-2018/

The next generation of voice is multi-modal: instead of voice-only devices, we are moving toward a great wave of “voice-also” devices. Examples include Volkswagen voice recognition for making calls, Walmart.com voice shopping, Roku voice commands for watching TV, and Nest smart thermostat products that use Google Assistant. Tech giants and startups alike are racing to integrate voice into everything, from your car “infotainment” system to your microwave and screen-first devices, so they can leverage the ease of use of voice to unlock new functionality.

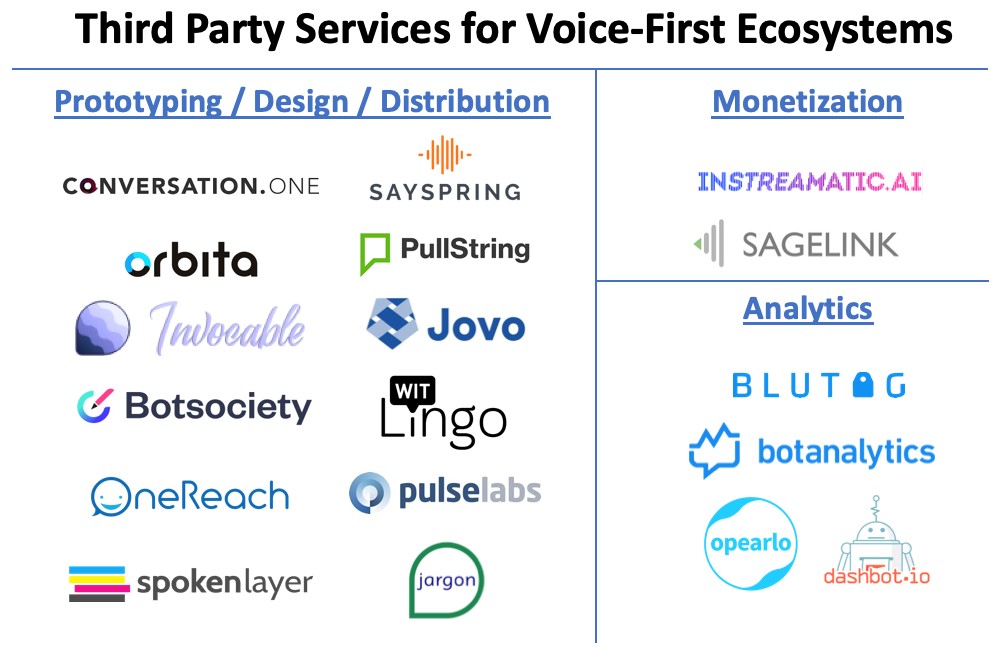

Figure 3: Many companies are springing up to help businesses create, monitor, and monetize voice applications

At Madrona, our investments in Pulse Labs and Saykara give us unique visibility into next gen voice applications and how businesses are looking to reach users with voice services. We believe that voice tech will enable new business models centered around e-commerce and advertising via multi-modal experiences. We see opportunities in creating a tools layer for voice developers and marketers as well as building intelligent vertical applications to solve specific problems. Opportunities exist in in-vehicle voice capabilities, integration across platforms and applications, home security systems, smart thermostats, and retail experiences that blend the digital and physical, to name a few.

Overall, voice technology is moving very quickly toward broad adoption. With the incredible amount of investment being put into voice technologies, both from the platform providers and from new software developers, we are looking forward to seeing breakthroughs in e-commerce, advertising, and multi-modal experiences. The first hurdle of creating an ecosystem has been cleared with voice-capable devices now in the hands (and homes) of millions of users. The true test will be finding ways to use that technology to solve a broader array of business and consumer problems, and to monetize those capabilities as impact grows.

XR interfaces – still slowly building momentum

XR is a big bet that we believe in for the long term, but our initial estimate of a 3-5 year timeline for reaching critical mass (which would have been 2019-2021) was overly optimistic. As we kick off 2019, we have the benefit of time, experience in the market, and a greater appreciation for what it will take for this industry to reach the masses. Our best estimates now push mainstream adoption of ‘premium VR’ (full headset/PC) back another five years to 2024.

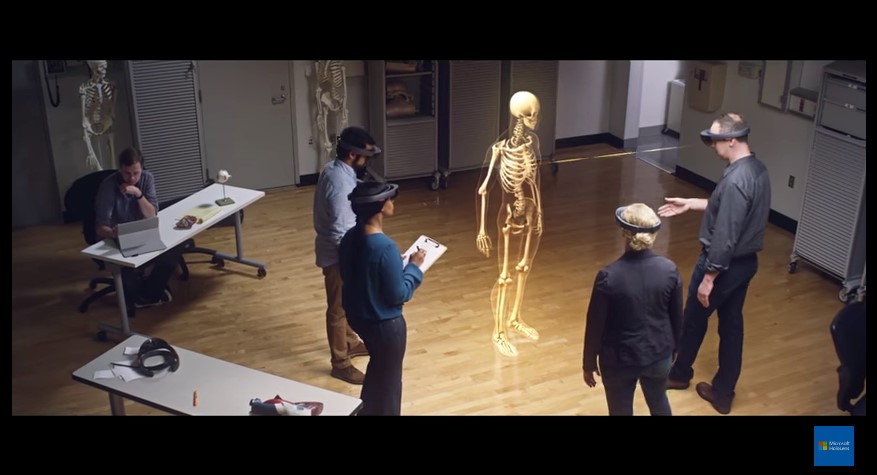

Microsoft’s HoloLens, which was released in late 2016, had sold only approximately 50,000 units by mid-2018, before its 100,000-unit deal with the U.S. Army was reported in November 2018. HoloLens 2.0 is slated to be revealed later this month, so we look forward to learning more then. The much-hyped Magic Leap launched a developer product in August 2018, but that was not designed as a mass release. In addition, sales of advanced PC-tethered headsets amount to fewer than 5 million units. Why the slower-than-anticipated uptake? Mass adoption is facing many headwinds on both sides of the marketplace.

On the consumer side: head-mounted devices (HMDs) are expensive (around $600 per headset plus upwards of $1,500 for the high-end PC needed to run the programs). Usability is also a challenge, as people are still getting comfortable with the feeling of wearing HMDs for long periods of time. On top of this, there are many opportunities for better XR accessories (e.g., foot pedals or “cyber shoes,” improved hand controllers, and other devices such as activity or workout aids or equipment) that will improve the immersive experience.

On the brand/business side: developing and implementing a worthwhile offering in XR requires more time, resources, and patience than originally thought. In addition, when done right, XR experiences should provide something specific and unique to the business that cannot be achieved otherwise. Finally, XR platforms aren’t standardized yet, so development requires significant customization.

Altogether, these factors have contributed to the XR market being sub-scale. We now ask ourselves: how many millions of these HMDs need to exist, and what applications or business verticals will need to be developed, for XR technology to become a self-supporting industry?

Most XR innovation to date is entertainment-based, with different approaches for attracting new users. For example, HTC’s Vive has VR rooms where you can watch whales and sea life, and there are cross-platform games where you can use lightsabers to slice through oncoming hazards. Against Gravity’s Rec Room is full of communities where you can build your own virtual rooms and worlds.

A trend we’re seeing now, while the XR consumer base slowly builds, is the emergence of virtual worlds or “metaverses” (collective virtual shared spaces created by the convergence of virtually enhanced physical reality and persistent virtual space). These start out as primarily non-XR virtual experiences (e.g., on Xbox, Twitch, mobile, or YouTube), though they may not always remain so. The key here is gaining a mass following while maintaining a “call option” on the ability to let users flip over to an XR interface as soon as they are ready. For example, Against Gravity’s Rec Room has quests that are available in both VR and non-VR, and Epic Games’ Fortnite just successfully hosted a record-breaking live, mixed-reality in-game concert with pop DJ Marshmello, the success of which sets the stage for more XR possibilities in the future. Entertainment-based applications like these will be the pathway to mass XR adoption.

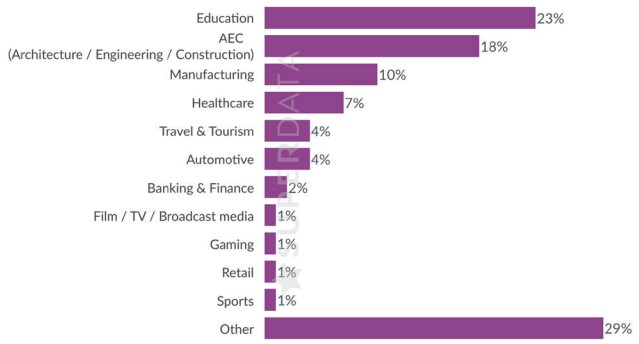

Beyond entertainment, there are many practical use cases for XR applications that we are excited about. Our investments in virtual reality startups Pixvana and Pluto help us follow customer needs and market movements, and one trend that we have been watching closely is the growing opportunity for XR adoption within enterprise and commercial use cases. Of note, we have seen an especially high number of applications in the medical training, retail, education, and field service categories. This is supported by the overall trends as reported by VRRoom, pictured below.

Figure 5: Industries of current and prospective XR end-users. Source: https://vrroom.buzz/sites/default/files/xr_industry_survey_results.pdf

XR has the capability to virtually “place shift”/transport the user and enable many compelling applications such as mixed-reality entertainment, medical/surgical training, field service equipment repair and maintenance, spatial design/modeling (e.g., architecture), remote education, experiential travel/events, and public safety activities, to name just a few. Imagine a world where you could shrink and zoom a virtual mockup of a new building with the flick of a finger to help design the HVAC system, participate in a NASCAR race from the comfort of your living room, or literally join your far-flung friends for an “in person” hangout in a virtual living room – this is the power of XR, and it is not far off. The challenge now is to provide greater variety in content, low-cost hardware, and improved usability and comfort to consumers (we’re looking forward to the rumored lightweight ‘glasses’ under development). In addition, we are supportive of the other mediums that XR companies can invest in now (such as mobile, YouTube, and PC) that can provide a bridge to a full premium XR experience in the future.

Together, these next gen user interfaces will feel more natural and make it easier for consumers to access features in a new way

New ‘interaction modes’ like voice, XR, and others will create compelling user experiences that both improve existing experiences and enable new ones that previously weren’t possible. We are excited to work with entrepreneurs as they innovate in these new areas. The opportunities for new applications, enabling technologies/devices, and content creation tools/platforms in next gen user interfaces will take us to the future.